Context

I stumbled on this book on my way to Yangon and devoured it within a few hours. It took me more time to write the summary than to read the book. The book is 300 pages long, and full credit goes to the author for making it so interesting. In this post, I will attempt to summarize the contents of the book.

This book is a fascinating adventure into all the aspects of the brain that are messy and chaotic. These aspects ultimately result in an illogical display of human tendencies – in the way we think, the way we feel and the way we act. The preface of the book is quite interesting too. The author profusely apologizes to the reader for one possible scenario, i.e. one in which the reader spends a few hours on the book and realizes that it was a waste of time. Also, the author is pretty certain that some of the claims made in the book will become obsolete very quickly, given the rapid developments happening in the field of brain sciences. The main purpose of the book is to show that the human brain is fallible and imperfect despite the innumerable accomplishments that it has bestowed upon us. The book talks about some of the common everyday experiences we have and ties them back to the structure of our brain and the way it functions.

Chapter 1: Mind Controls

How the brain regulates the body, and usually makes a mess of things

The human brain has evolved over millions of years. Its original function was to ensure the survival of the body, so most of its functions were akin to those of a reptile; hence the term reptilian brain. The reptilian brain comprises the cerebellum and the brain stem. However, the nature and size of the human brain have undergone rapid changes in the recent past. As the nature of food consumption changed, i.e. from raw food to cooked food, the size of the brain increased, which in turn made humans seek out more sophisticated types of food. With survival-related problems taken care of, the human brain developed several new areas as compared to its ancestors. One of the most important of these areas is the neo-cortex. If one were to dissect the brain on an evolutionary time scale, the reptilian brain came first and the neo-cortex later.

Humans have a sophisticated array of senses and neurological mechanisms. These give rise to proprioception, the ability to sense how our body is currently arranged, and which parts are going where. There is also, in our inner ear, the vestibular system that helps detect balance and position. The vestibular system and proprioception, along with the input coming from our eyes, are used by the brain to distinguish between an image we see while running and a moving image seen while stationary. This interaction explains the reason we throw up during motion sickness. While sitting in a plane or a ship, there is movement, even though our body is stationary. The eyes transmit these moving signals to the brain and so does the vestibular system, but the proprioceptors do not send any signal as our body parts are stationary. This creates confusion in the brain and leads to the classic conclusion that there is poison and it has to be removed from the body.

The brain’s complex and confusing control of diet and eating

Imagine we have eaten a heavy meal and our stomach is more than full, when we spot the dessert. Why do most of us go ahead and gorge on the dessert? It is mainly because our brains have a disproportionate say in our appetite, and interfere in almost every food-related decision. Whenever the brain sees a dessert, its pleasure pathways are activated and the brain makes an executive decision to eat the dessert and override any signals from the stomach. This is also the way protein milkshakes work. As soon as they are consumed, the dense stuff fills up the stomach, expanding it in the process and sending an artificial signal to the brain that the body is fed. Stomach expansion signals, however, are just one small part of diet and appetite control. They are the bottom rung of a long ladder that goes all the way up to the more complex elements of the brain. Appetite is also determined by a collection of hormones secreted by the body, some of which pull in opposite directions; the ladder therefore occasionally zigzags or even goes through loops on the way up. This explains why many people report feeling hungry less than 20 minutes after drinking a milkshake!

Why does almost everyone eat between 12 and 2 pm, irrespective of the kind of work being done? One of the reasons is that the brain gets used to the pattern of eating food in that time slot and expects food once the routine is established. This works not only for pleasant things but also for unpleasant things. Subject yourself to the pain of sitting down every day and doing some hard work, like working through some technical stuff, programming, playing difficult musical notes, etc. Once you do it regularly, the brain ignores the initial pain associated with the activity, making it easier to pursue such activities.

The takeaway of this section is that the brain interferes in eating and this can create problems in the way food is consumed.

The brain and the complicated properties of sleep

Why does a person need sleep? Umpteen theories have been put forward. The author provides an interesting narrative of each, but subscribes to none. Instead he focuses on what exactly happens in our body that makes us sleep. The pineal gland in the brain secretes the hormone melatonin, which is involved in the regulation of circadian rhythms. The amount secreted is inversely related to the light signals passing through our eyes. Hence the secretions rise as daylight fades, and the increased secretion levels lead to feelings of relaxation and sleepiness.

This is the mechanism behind jet-lag. Travelling to another time zone means you are experiencing a completely different schedule of daylight, so you may be experiencing 11 a.m. levels of daylight when your brain thinks it’s 8 p.m. Our sleep cycles are very precisely attuned, and this throwing off of our melatonin levels disrupts them. And it’s harder to ‘catch up’ on sleep than you’d think; your brain and body are tied to the circadian rhythm, so it’s difficult to force sleep at a time when it’s not expected (although not impossible). A few days of the new light schedule and the rhythms are effectively reset.

Why do we have REM sleep? For adults, usually 20% of sleep is REM sleep and for children, 80% of sleep is REM sleep. One of the main functions of REM sleep is to reinforce, organize and maintain memories.

The brain and the fight or flight response

The thalamus can be considered the central hub of the brain. Information via the senses enters the thalamus from where it is sent to the cortex and further on to the reptilian brain. Sensory information is also sent to the amygdala, an area that is said to be the seat of EQ. If there is something wrong in the environment, even before the cortex can analyze and respond to it, the amygdala invokes the hypothalamus which in turn invokes certain types of nerve systems that generate the fight or flight response such as dilating our pupils, increasing our heart rate, shunting blood supply away from peripheral areas and directing it towards muscles etc.

The author provides a good analogy for the thalamus system:

If the brain were a city, the thalamus would be like the main station where everything arrives before being sent to where it needs to be. If the thalamus is the station, the hypothalamus is the taxi rank outside it, taking important things into the city where they get stuff done

Chapter 2: Gift of memory

One hears the word "memory" thrown around in a lot of places, especially in connection with devices such as computers, laptops, mobile phones etc. Sometimes our human memory is wrongly assumed to be similar to a computer memory or hard drive, where information is neatly stored and retrieved. Far from it: our memory is extremely convoluted. This chapter talks about various intriguing and baffling properties of our brain’s memory system.

The divide between long-term and short-term memory

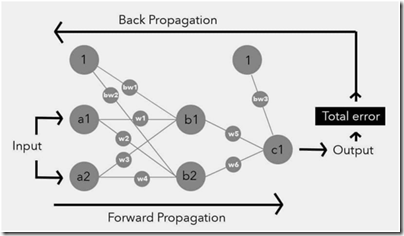

The way memories are formed, stored and accessed in the human brain, like everything else in the brain, is quite fascinating. Memory can be categorized into short-term memory (working memory) and long-term memory. Short-term memory is anything that we hold in our minds for not more than a few seconds. There is a ton of stuff that we think about throughout the day; it stays in our short-term memory and then disappears. There is no physical basis for short-term memories. They are associated with areas in the prefrontal cortex, where they are captured as neuronal activity. Since short-term memory is in constant use, whatever is in working memory is captured as neuronal activity that changes very quickly, and nothing persists. But then how do we have memories? This is where long-term memory comes in. In fact there are a variety of long-term memories.

Each of the above memories is processed and stored in various parts of the brain. The basal ganglia, structures lying deep within the brain, are involved in a wide range of processes such as emotion, reward processing, habit formation, movement and learning. They are particularly involved in co-ordinating sequences of motor activity, as would be needed when playing a musical instrument, dancing or playing basketball. The cerebellum is another area that is important for procedural memories.

An area of the brain that plays a key role in encoding the stuff that we see/hear/say/feel into memories is the hippocampus. It encodes memories and slowly moves them to the cortex, where they are stored for retrieval. Episodic memories are indexed in the hippocampus and stored in the cortex for later retrieval. If everything is stored in memory in some form or the other, why are certain things easier to recall and others more difficult? This depends on the richness, repetition and intensity of the encoding. These factors make something harder or easier to retrieve.

The mechanisms of why we remember faces before names

I have had this problem right from my undergrad days. I can remember faces very well, but remembering names is a challenge. I have a tough time recollecting the names of colleagues, the names of places that I visit, and the names of various clients I meet. I find it rather painful when my mind just blanks out while recalling someone’s name. The chapter gives a possible explanation – the brain’s two-tier memory system that is at work retrieving memories.

And this gives rise to a common yet infuriating sensation: recognising someone, but not being able to remember how or why, or what their name is. This happens because the brain differentiates between familiarity and recall. To clarify, familiarity (or recognition) is when you encounter someone or something and you know you’ve done so before. But beyond that, you’ve got nothing; all you can say is this person/thing is already in your memories. Recall is when you can access the original memory of how and why you know this person; recognition is just flagging up the fact that the memory exists. The brain has several ways and means to trigger a memory, but you don’t need to ‘activate’ a memory to know it’s there. You know when you try to save a file onto your computer and it says, ‘This file already exists’? It’s a bit like that. All you know is that the information is there; you can’t get at it yet.

Also, the visual information conveyed by a person’s facial features is far richer and sends stronger signals to the brain than auditory signals such as the person’s name. Short-term memory is largely aural, whereas long-term memory relies on vision and semantic qualities.

How alcohol can actually help you remember things

Alcohol increases the release of dopamine and hence activates the brain’s pleasure pathways. This in turn creates a euphoric buzz, which is one of the states that alcoholics seek. Alcohol also leads to memory loss. So when exactly does it help you remember things? Alcohol dims the activity level of the brain and hence lessens its control over various impulses. It is like dimming the red lights on all the roads in a city. With this reduced control, humans tend to do many things that they would not do while sober. Alcohol disrupts the hippocampus, the main region for memory formation and encoding. However, regular alcoholics get used to a certain level of consumption. Hence any interesting gossip that an alcoholic picks up in an inebriated state is far more likely to be remembered when the body is again in an inebriated state. So, in such situations, alcohol might actually help you remember things. The same explanation also applies to caffeine-fuelled all-nighters: take some caffeine just before the exam so that the internal state of the body is the same as the one in which the information was crammed. If you take an examination in a caffeinated state, you are more likely to remember stuff that you crammed in a caffeinated state.

The ego-bias of our memory systems

This section talks about the way the brain alters memories to suit a person’s ego. It tweaks memories in such a way that it flatters the human ego and over a period of time these tweaks can become self-sustaining. This is actually very dangerous. What you retrieve from your mind changes, to flatter your ego. This means that it is definitely not a wise thing to trust your memories completely.

When and how the memory system can go wrong

The author gives a basic list of possible medical conditions that might arise if the memory mechanism fails in the human brain. These are:

- False memories – these can be implanted in our heads by just talking
- Alzheimer’s – associated with significant degeneration of the brain
- Strokes – these can affect the hippocampus, leading to memory deficits
- Temporal lobe removal – can cause permanent loss of long-term memories
- Anterograde amnesia – caused by virus attacks on the hippocampus

Chapter 3: Fear

Fear is something that comes up in a variety of shades as one ages. During childhood, it is usually the fear of not meeting the expectations of parents and teachers, of not fitting into a school environment etc. As one moves on to youth, it is usually the fear of not getting the right job, and fear arising out of emotional and financial insecurities. As one trudges into middle age, fears revolve around meeting the financial needs of the family, avoiding getting downsized, fear of conformity, fear of not keeping healthy etc. At each stage, we somehow manage to conquer fear through experience, or live with it. However, there are other kinds of fears that we carry throughout our lives. Even though our living conditions have massively improved, our brains have not had a chance to evolve. Hence there are many situations where our brains are primed to think of potential threats even when there aren’t any. Look at people who have strange phobias, who believe in conspiracy theories, or who hold bizarre notions; all these are creations of our idiot brain.

The connection between superstition, conspiracy theories and other bizarre beliefs

Apophenia involves seeing connections where there aren’t any. This, to me, seems to be a manifestation of Type I errors in real life. The author states that it is our bias towards rejecting randomness that gives rise to superstitions, conspiracy theories etc. Since humans fundamentally find it difficult to embrace randomness, their brains cook up associations where there are none and make them hold on to these assumptions to give a sense of quasi-control in this random and chaotic world.
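The Type I analogy can be made concrete with a small simulation. This is only an illustrative sketch of my own, not something from the book: the function name, the number of coins and the 1.96 cutoff (a conventional 5% two-sided threshold) are all assumptions. Every coin here is fair, yet a naive "pattern detector" still flags a few of them as biased.

```python
import random

def count_spurious_findings(n_experiments=1000, n_flips=100, z_cutoff=1.96, seed=0):
    """Flip many fair coins and count how many look 'biased' by chance alone."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    spurious = 0
    for _ in range(n_experiments):
        heads = sum(rng.random() < 0.5 for _ in range(n_flips))
        # For a fair coin: expected heads = n/2, standard deviation = sqrt(n)/2
        z = (heads - n_flips / 2) / (n_flips ** 0.5 / 2)
        if abs(z) > z_cutoff:
            spurious += 1  # a Type I error: a "pattern" where none exists
    return spurious

spurious = count_spurious_findings()
print(f"{spurious} of 1000 fair coins looked 'biased'")
```

A brain that treats each of those flagged coins as meaningful is doing roughly what the author describes: holding on to an association that randomness alone produced.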

Chapter 4: The baffling science of intelligence

I found this chapter very interesting as it talks about an aspect that humans are proud of, i.e. human intelligence. There are many ways in which human intelligence is manifested. But as far as tools are concerned, we have a limited set of tools to measure intelligence, and whatever tools we do have do not do a good job of measurement. Take IQ for example. Did you know that the average IQ for any country is 100? Yes, it is a relative scale. It means that if a deadly virus kills all the people in a country who have IQ > 100, the average IQ of the country, once the test is re-normed, still remains 100 because it is a relative score. The Guinness Book of Records has retired the category of “Highest IQ” because of the uncertainty and ambiguity of the tests.
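To see why the average stays at 100, here is a minimal sketch of how a relative scale behaves. The raw scores are made up, the helper name `to_iq_scale` is hypothetical, and the standard deviation of 15 is a common convention that I am assuming rather than something taken from the book. It also assumes the test is re-normed on whoever remains.

```python
import statistics

def to_iq_scale(raw_scores, mean=100.0, sd=15.0):
    """Rescale raw test scores so the group has the given mean and SD."""
    mu = statistics.fmean(raw_scores)
    sigma = statistics.pstdev(raw_scores)
    return [mean + sd * (x - mu) / sigma for x in raw_scores]

# Hypothetical raw test scores for a tiny "country"
raw = [12, 18, 23, 27, 31, 35, 40, 44, 52, 58]
iq = to_iq_scale(raw)

# The virus removes everyone above 100; the test is then re-normed on the survivors
survivors_raw = [r for r, q in zip(raw, iq) if q <= 100]
survivors_iq = to_iq_scale(survivors_raw)

print(round(statistics.fmean(iq), 6), round(statistics.fmean(survivors_iq), 6))
```

The raw scores of the survivors are lower on average, but after rescaling their mean is back at 100, which is all that "average IQ is 100" really says.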

Charles Spearman did a great service to intelligence research in the 1920s by developing factor analysis. Spearman used a process to assess IQ tests and discovered that there was seemingly one underlying factor that underpinned test performance. This was labelled the single general factor, g, and if there’s anything in science that represents what a layman would think of as intelligence, it’s g. But even g does not equate to all intelligence. There are two types of intelligence that are generally acknowledged by the research and scientific community – fluid intelligence and crystallized intelligence.

Fluid intelligence is the ability to use information, work with it, apply it, and so on. Solving a Rubik’s cube requires fluid intelligence, as does working out why your partner isn’t talking to you when you have no memory of doing anything wrong. In each case, the information you have is new and you have to work out what to do with it in order to arrive at an outcome that benefits you. Crystallised intelligence is the information you have stored in memory and can utilise to help you get the better of situations. It has been hypothesized that Crystallised intelligence stays stable over time but fluid intelligence atrophies as we age.

Knowledge is knowing that a tomato is a fruit; wisdom is not putting it in a fruit salad. It requires crystallised intelligence to know how a tomato is classed, and fluid intelligence to apply this information when making a fruit salad.

Some scientists believe in "Multiple Intelligence" theories, where intelligence could be of varying types. Sportsmen have a different kind of intelligence from chess masters, who in turn have a different sort of intelligence from instrumentalists, etc. Depending on what one chooses to spend time on, the brain develops a different kind of intelligence, and just two categories (fluid and crystallized) are too restrictive to capture all types of intelligence. Even though the "Multiple Intelligence" theory appears plausible, the research community has not found solid evidence to back this hypothesis.

Why intelligent people often lose arguments

The author elaborates on impostor syndrome, a behavioural trait in intelligent people. One does not need a raft of research to believe that the more you understand something, the more you realize that you know very little about it. Intelligent people, by the very way they have built up their intelligence, are forever skeptical and uncertain about a lot of things. As a result, their arguments seem balanced rather than highly opinionated. On the other hand, with less intelligent people, the author points out that one can see the Dunning-Kruger effect in play.

Dunning and Kruger argued that those with poor intelligence not only lack the intellectual abilities, they also lack the ability to recognise that they are bad at something. The brain’s egocentric tendencies kick in again, suppressing things that might lead to a negative opinion of oneself. But also, recognising your own limitations and the superior abilities of others is something that itself requires intelligence. Hence you get people passionately arguing with others about subjects they have no direct experience of, even if the other person has studied the subject all their life. Our brain has only our own experiences to go from, and our baseline assumptions are that everyone is like us. So if we’re an idiot…

Crosswords don’t actually keep your brain sharp

The author cites fMRI-based research to say that intelligent brains use less brain power to think through or solve problems. When a set of people were posed equally challenging tasks, the scans showed that intelligent people could solve all of them without any increase in brain activity; their brain activity increased only when the task complexity increased. These scans of prefrontal cortex activity show that it is performance that matters rather than power. There’s a growing consensus that it’s the extent and efficiency of the connections between the regions involved (prefrontal cortex, parietal lobe and so on) that has a big influence on someone’s intelligence; the better he or she can communicate and interact, the quicker the processing and lower the effort required to make decisions and calculations. This is backed up by studies showing that the integrity and density of white matter in a person’s brain is a reliable indicator of intelligence. Having given this context, the author goes on to talk about the plasticity of the brain and how musicians develop a certain aspect of the motor cortex after years of practice.

While the brain remains relatively plastic throughout life, much of its arrangement and structure is effectively set. The long white-matter tracts and pathways will have been laid down earlier in life, when development was still under way. By the time we hit our mid-twenties, our brains are essentially fully developed, and it’s fine-tuning from thereon in. This is the current consensus anyway. As such, the general view is that fluid intelligence is ‘fixed’ in adults, and depends largely on genetic and developmental factors during our upbringing (including our parents attitudes, our social background and education). This is a pessimistic conclusion for most people, especially those who want a quick fix, an easy answer, a short-cut to enhanced mental abilities. The science of the brain doesn’t allow for such things.

To circle back to the title of the section, solving crosswords will help you become good at that task alone. Working through brain games will help you become good at that specific game alone. The brain is complex enough that engaging in one specific activity does not strengthen all the connections across the brain. Hence the conclusion that solving crosswords might make you good in that specific area, but it doesn’t do anything for overall intelligence. Think back to the friends you knew who could crack crossword puzzles quickly. Do a quick check on where they are now and what their creative output has been so far, and judge for yourself.

The author ends the chapter by talking about one commonly remarked-upon phenomenon: tall people being perceived as smarter than shorter people, on average. He cites many theories and acknowledges that none is conclusive enough to validate the phenomenon.

There are many possible explanations as to why height and intelligence are linked. They all may be right, or none of them may be right. The truth, as ever, probably lies somewhere between these extremes. It’s essentially another example of the classic nature vs nurture argument.

Chapter 5: Did you see this chapter coming?

The information that reaches our brain via the senses is often more like a muddy trickle than a perfect representation of the outside world. The brain then does an incredible job of creating a detailed representation of the world based on this limited information. This process itself depends on many peculiarities of an individual brain, and hence errors tend to creep in. This chapter talks about the way information is reconstructed by our brains and the errors that can creep in during this process.

Why smell is more powerful than taste

Smell is often underrated. It is estimated that humans have a capacity to smell up to 1 trillion odours. Smell is in fact the first sense that evolves in a foetus.

There are 12 cranial nerves that link the functions of the head and face to the brain. One of them is the olfactory nerve. The olfactory neurons that make up the olfactory nerve are unique in many ways; they are one of the few types of neurons that can regenerate. They need to regenerate because they are directly in contact with the external world and hence wear out. The olfactory nerve sends electrical signals to the olfactory bulb, which relays information to the olfactory nucleus and the piriform cortex.

In the brain, the olfactory system lies in close proximity to the limbic system, and hence certain smells are strongly associated with vivid and emotional memories. This is one of the reasons why marketers carefully choose the odours in display stores in order to elicit purchases from prospects. One misconception about smell is that it can’t be fooled, but research has shown that there are in fact olfactory illusions. Smell does not operate alone. Smell and taste are classed as "chemical" senses, i.e. the receptors respond to specific chemicals. There have been experiments where subjects were unable to distinguish between two completely different food items when their olfactory senses were disabled. Think about all the days when you had a bad cold and seemed to have lost the sense of taste. The author takes a dig at wine tasters and says that all their so-called abilities are a bit overrated.

How hearing and touch are related

Hearing and touch are linked at a fundamental level. They are both classed as mechanical senses, meaning they are activated by pressure or physical force. Hearing is based on sound, and sound is actually vibrations in the air that travel to the eardrum and cause it to vibrate.

The sound vibrations are transmitted to the cochlea, a spiral-shaped fluid-filled structure, and thus sound travels into our heads. The cochlea is quite ingenious, because it’s basically a long, curled-up, fluid-filled tube. Sound travels along it, but the exact layout of the cochlea and the physics of sound waves mean the frequency of the sound (measured in hertz, Hz) dictates how far along the tube the vibrations travel. Lining this tube is the organ of Corti. It’s more of a layer than a separate self-contained structure, and the organ itself is covered with hair cells, which aren’t actually hairs, but receptors, because sometimes scientists don’t think things are confusing enough on their own. These hair cells detect the vibrations in the cochlea, and fire off signals in response. But the hair cells only in certain parts of the cochlea are activated due to the specific frequencies travelling only certain distances. This means that there is essentially a frequency ‘map’ of the cochlea, with the regions at the very start of the cochlea being stimulated by higher-frequency sound waves (meaning high-pitched noises, like an excited toddler inhaling helium) whereas the very ‘end’ of the cochlea is activated by the lowest-frequency sound waves. The cochlea is innervated by the eighth cranial nerve, named the vestibulocochlear nerve. This relays specific information via signals from the hair cells in the cochlea to the auditory cortex in the brain, which is responsible for processing sound perception, in the upper region of the temporal lobe.

What about touch?

Touch has several elements that contribute to the overall sensation. As well as physical pressure, there’s vibration and temperature, skin stretch and even pain in some circumstances, all of which have their own dedicated receptors in the skin, muscle, organ or bone. All of this is known as the somatosensory system (hence somatosensory cortex) and our whole body is innervated by the nerves that serve it.

Also, touch sensitivity isn’t uniform throughout the body. Like hearing, the sense of touch can also be fooled. The close connection between touch and hearing means that if there is a problem in one, there often tends to be a problem with the other.

What you didn’t know about the visual system

The visual system is the most dominant of all the senses and also the most complicated. If you think about the retina, only 1% of its area (the fovea) can take in the finer details of a scene, and the remaining 99% takes in hazy peripheral details. It is just amazing that our brain can construct the image by utilizing a vast amount of peripheral data and make us feel that we are watching a crystal-clear image. There are many aspects of visual processing mentioned in this chapter that make you wonder at this complex mechanism that we use every day. When we move our eyes from left to right, even though we see one smooth image, the brain actually receives a series of jerky scans and then recreates a smooth image.

Visual information is mostly relayed to the visual cortex in the occipital lobe, at the back of the brain. The visual cortex itself is divided into several different layers, which are themselves often subdivided into further layers. The primary visual cortex, the first place the information from the eyes arrives in, is arranged in neat ‘columns’, like sliced bread. These columns are very sensitive to orientation, meaning they respond only to the sight of lines of a certain direction. In practical terms, this means we recognise edges. The secondary visual cortex is responsible for recognising colours, and is extra impressive because it can work out colour constancy. It goes on like this, the visual-processing areas spreading out further into the brain, and the further they spread from the primary visual cortex the more specific they get regarding what it is they process. It even crosses over into other lobes, such as the parietal lobe containing areas that process spatial awareness, to the inferior temporal lobe processing recognition of specific objects and (going back to the start) faces. We have parts of the brain that are dedicated to recognising faces, so we see them everywhere.

The author ends this section by explaining the simple mechanism by which our brain creates a 3D image from the 2D information on the retina. This mechanism is exploited by 3D film makers to create movies for which we end up paying more than the usual ticket price.

Strengths and Weaknesses of Human Attention

There are two questions that are relevant to the study of attention.

Two models have been put forth for answering the first question and have been studied in various research domains. The first is the Bottleneck model, which says that all the information that gets into our brains is channelled through a narrow space offered by attention. It is like a telescope, where you see a specific region but cut out all the information from other parts of the sky. Obviously this is not the complete picture of how our attention works. Imagine you are talking to a person at a party and somebody else mentions your name; your ears perk up, your attention darts to this somebody else, and you want to know what they are saying about you.

To address the limitations of the Bottleneck model, researchers have put forth a Capacity model, which says that human attention is finite and can be put to use across multiple streams of information so long as the finite resource is not exhausted. The limited capacity is said to be associated with the fact that we have limited working memory. So, can you indulge in multi-tasking without compromising on the efficiency of tasks? Not necessarily. If you have trained certain tasks into procedural memory, then you can probably do such tasks AND do some other tasks that require conscious attention. Think about preparing a dish that you have made umpteen times while your brain is suddenly thinking of something completely different. So, it is possible to free up attention on a task only after committing some parts of the task to procedural memory. All this might sound very theoretical, but I see it at work in my music practice. Unless I build muscle memory of a raag, let us say the main piece of a raag and some of its standard phrases, there is no way I can progress to improvisations.

About the second question, most of our attention is directed to what we see. It is obvious in a way. Our eyes carry most of the signals to our brains. This is a "top-down" approach to why we pay attention. We see something and we pay attention. There is also another kind of approach, a "bottom-up" one, where something detected as biologically significant can make us pay attention without the conscious parts of the brain having any say in it. This makes sense, as our reptilian brain needed to pay attention to various stimuli before even consciously processing them.

Now, where does the idiocy of the brain come in? There are many examples cited in the chapter, one being the "door man" experiment. The experiment is a classic reminder that when we are tunnel-focused, we sometimes miss something very apparent that’s going on in the external environment. The way I relate to this experiment is – one needs to be focused and free from distraction when one is doing a really challenging task. But at the same time, it is important to scan the environment a bit and see whether it makes sense to keep approaching the task in the same manner. In other words, distancing yourself from the task from time to time is the key to doing the task well. I came across a similar idea in Anne Lamott’s book – Bird by Bird. Anne Lamott mentions an incident when she almost gives up on a book, takes a break, comes back to it after a month and finishes off the book in style. Attention to a task is absolutely important, but beyond a point it can prove counter-productive.

Chapter 6: Personality

Historically, people believed that the brain had nothing to do with a person’s personality. This was until the Phineas Gage case surfaced in the 1850s – Phineas Gage suffered a brain injury and subsequently his mannerisms changed completely. Since then, many experiments have shown that the brain does have a say in personality. The author points out many problems in measuring the brain’s direct impact on personality.

The questionable use of personality tests

Personality patterns across a diverse set of individuals are difficult to infer. However, there are certain aspects of personality where we see a surprising set of common patterns. According to the Big 5 Traits theory, everyone falls somewhere between two extremes on each of the Big 5 Traits:

- Openness
- Conscientiousness
- Extraversion
- Agreeableness
- Neuroticism

Now one can easily dismiss the above traits by saying that they are too reductionist in nature. There are many limitations of the Big 5 theory. It is based on Factor Analysis, a tool that tells us the sources of variation but does not say anything about causation. Whether the brain evolves to suit the personality type or personalities evolve based on the brain structure is also a difficult question to answer. There are many other personality tests, such as Type A/Type B, the Myers-Briggs Type Indicator, etc. Most of these tests have been put together by amateur enthusiasts and somehow they have become popular. The author puts together a balanced account of several theories and seems to conclude that most personality tests are crap.

How anger works for you and why it can be a good thing

The author cites various research studies to show that anger evokes signals in both the left and the right brain. In the right hemisphere it produces negative, avoidance or withdrawal reactions to unpleasant things, and in the left hemisphere, positive and active behaviour. Research shows that venting anger reduces cortisol levels and relaxes the brain. Suppressing anger for a long time might cause a person to overreact to harmless situations. So, does the author advocate venting anger in every instance? Not really. All that this section does is point out research evidence that venting anger is sometimes OK.

How different people find and use motivation

How does one get motivated to do something? This is a very broad question that will elicit a variety of answers. There are many theories and the author gives a whirlwind tour of them all. The most basic of all is that humans tend to do activities that involve pleasure and avoid those that involve pain. It is so simplistic in nature that it was the first theory to be ripped apart. Then came Maslow’s hierarchy of needs, which looks good on paper. The fancy pyramid of needs that every MBA student reads about might seem to explain motivational needs. But you can think of examples from your own life when the motivation to do certain things did not neatly fit the pyramid structure. Then there is the theory of extrinsic and intrinsic motivation. Extrinsic motivations are derived from the outside world. Intrinsic motivations drive us to do things because of decisions or desires that we come up with ourselves. Some studies have shown that money can be a demotivating factor when it comes to performance. Experiments have shown that subjects without a carrot performed better and seemed to enjoy the tasks more than subjects with a carrot. There are some other theories that talk about ego gratification as the motivating factor. Out of all the theories and quirks that have been mentioned in the chapter, the only thing that has kind of worked in my life is the Zeigarnik effect, where the brain really doesn’t like things being incomplete. This explains why TV shows use cliff-hangers so often; the unresolved storyline compels people to tune into the conclusion, just to end the uncertainty. To give another example, many times I have stopped doing something when I badly wanted to work more on it. This has always been the best option in hindsight. Work on something to an extent that you leave some of it incomplete. This gives you the motivation to work on it the next day.

Chapter 7: Group Hug

Do we really need to listen to other people to understand or gauge their motives? Do facial expressions give away intentions? These are some of the questions tackled in this chapter. It was believed for a long time that the speech processing areas in the brain are Broca’s area, named for Pierre Paul Broca, at the rear of the frontal lobe, and Wernicke’s area, identified by Carl Wernicke, in the temporal lobe region.

Damage to these areas produced profound disruptions in speech and understanding. For many years these were considered the only areas responsible for speech processing. However, brain-scanning technology has advanced, and the picture has changed since then. Broca’s area, a frontal lobe region, is still important for processing syntax and other crucial structural details, which makes sense; manipulating complex information in real-time describes much of the frontal lobe activity. Wernicke’s area, however, has been effectively demoted due to data that shows the involvement of much wider areas of the temporal lobe around it.

via Neuroscience.com

Although the field as a whole has made tremendous progress in the past few decades, due in part to significant advances in neuroimaging and neurostimulation methods, we believe abandoning the Classic Model and the terminology of Broca’s and Wernicke’s areas would provide a catalyst for additional theoretical advancement.

Damage to Broca’s and Wernicke’s areas disrupts the many connections between language-processing regions, hence aphasias. But the fact that language-processing centres are so widely spread throughout the brain shows language to be a fundamental function of the brain, rather than something we pick up from our surroundings. Communication, though, also involves non-verbal cues. Many experiments conducted on aphasia patients show that non-verbal cues can easily be inferred from facial expressions, and thus intentions are difficult to fake by just talking. Our faces give away our true intentions. The basic theory behind facial expressions is that there are voluntary facial expressions and involuntary facial expressions. Poker players are excellent at controlling voluntary facial expressions and train their brains to control certain kinds of involuntary facial expressions. However, we do not have full control over involuntary facial expressions, and hence an acute observer can easily spot our true intentions by paying attention to them.

The author explores situations such as romantic breakups, fan club gatherings and situations where we are predisposed to cause harm to others. The message from all these narratives is that our brain gets influenced by people around us in ways we cannot fathom completely. People around you influence the way you think and act. The aphorism – tell me who your friends are, and I will tell you who you are – resonates clearly in various examples mentioned in the chapter.

Chapter 8: When the Brain breaks down

All the previous chapters in the book talk about how our brain is an idiot, when functioning in a normal way. The last chapter of the book talks about situations when the brain stops functioning in a normal way. The author explores various mental health issues such as depression, drug addiction, hallucination and nervous breakdown. The chapter does a great job of giving a gist of the competing theories out there to explain some of the mental health issues.

Takeaway

For those whose work is mostly cerebral in nature, this book is a good reminder that we should not identify with our brains or trust our brains beyond a certain point. A healthy dose of skepticism towards whatever our brain makes us feel, think and do, is a better way to lead our lives. The book is an interesting read, with just enough good humor spread across, and with just enough scientific details. Read the book if you want to know to what extent our brains are idiosyncratic and downright stupid in their workings.