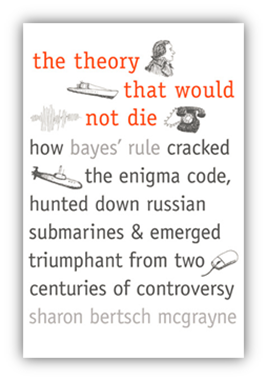

The book by Sharon Bertsch McGrayne is about Bayes’ theorem, stripped of the math associated with it. In today’s world, statistics even at a rudimentary level of analysis (not research, but preliminary analysis) consists of forming a prior and improving it based on the data one gets to see. In one sense, modern statistics takes for granted that one starts off with a set of beliefs and refines them in light of data. When this sort of thinking was first introduced, it was considered pseudo-science, or maybe voodoo science. In the 1700s, when Bayes’ theorem first appeared, science was considered extremely objective, rational and all the words that go with it, while Bayes was talking about beliefs and about improving those beliefs with data. So how did the world come to accept this way of thinking? Today there is hardly a domain untouched by Bayesian statistics. In finance and technology alone, Google’s search algorithms and Gmail’s spam filtering, Netflix’s recommendation service, Amazon’s book recommendations, the Black–Litterman model in finance and arbitrage models based on Bayesian econometrics are a few of the innumerable areas where Bayes’ philosophy is applied. Carol Alexander, in her book on risk management, says that the world needs Bayesian risk managers and remarks, “Sadly most of risk management that is done is frequentist in nature”.

Pick up any book on Bayes and the first thing you read about is prior and posterior distributions. Many books say nothing about the history behind Bayes. It is this void that the book aims to fill, and it does so with a fantastic narrative about the people who rallied for and against a method that took 200 years to be vindicated. Let me summarize the five parts of the book. This is probably the longest post I have ever written for a non-fiction book, the reason being that I will be referring to this summary from time to time as I hope to apply Bayes at my workplace.

Part I – Enlightenment and Anti-Bayesian Reaction

Causes in Air

The book starts by describing the circumstances under which Thomas Bayes wrote down the inverse probability problem, that is, deducing causes from their effects: inferring probabilities from data, or improving prior beliefs in light of data. The author speculates that several influences might have motivated Bayes to think about the inverse probability problem, such as David Hume’s philosophical essay, de Moivre’s book on chances, the Earl of Stanhope, and Isaac Newton’s concern that he had not explained the cause of gravity. Instead of publishing the work or sending it to the Royal Society, Bayes let it lie among his mathematical papers. Shortly after his death, his relatives asked Richard Price to look into the various works Bayes had left. Price became interested in the inverse probability work, polished it, added various references and made it ready for publication. He then sent it to the Royal Society for publication.

Thomas Bayes (1701–1761) and Richard Price (1723–1791)

Bayesian theory differs from frequentist theory in that you start with a prior belief about an event, collect data, and then update the prior probability. Frequentists, by contrast, typically do the following:

- Hypothesise a probability distribution: the random variable under study is assumed to follow a certain probability distribution. No experiment has been conducted yet, but the model specifies every value the random variable could take and how likely each is. This step is typically called Pre-Statistics (David Williams’ terminology from the book “Weighing the Odds”).
- Use actual data to turn the Pre-Statistic into a realized statistic, and then report confidence intervals.

This is in contrast with Bayes, where a prior is formed from one’s beliefs and data are used to update those beliefs. In that sense, the p-values and the like that one usually reads about in college texts have no role in the Bayesian world.
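To make the prior-to-posterior step concrete, here is a minimal sketch built on a toy problem that does not come from the book. It considers three hypothetical hypotheses about a coin’s probability of heads, gives them a uniform prior, and updates that prior after a handful of observed tosses. Bayes’ theorem multiplies each prior probability by the likelihood of the data and renormalizes. The function names are illustrative only.

```python
def bayes_update(prior, likelihood):
    """Return the posterior P(H | data) from P(H) and P(data | H).

    Both arguments are dicts keyed by hypothesis.
    """
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    evidence = sum(unnormalized.values())  # P(data), the normalizing constant
    return {h: p / evidence for h, p in unnormalized.items()}


def coin_likelihood(p_heads, heads, tails):
    """Probability of one particular sequence with these counts of heads and tails."""
    return p_heads ** heads * (1 - p_heads) ** tails


# Hypothetical example: three candidate biases for a coin, equally plausible a priori.
hypotheses = [0.3, 0.5, 0.7]
prior = {h: 1 / len(hypotheses) for h in hypotheses}

# Observe 7 heads and 3 tails, then update the prior into a posterior.
likelihood = {h: coin_likelihood(h, heads=7, tails=3) for h in hypotheses}
posterior = bayes_update(prior, likelihood)

for h in hypotheses:
    print(f"P(bias = {h} | data) = {posterior[h]:.3f}")
```

To handle the next batch of tosses, feed this posterior back in as the new prior. That is the "improve beliefs as data arrives" loop in its simplest form.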

The Man who did Everything

![Pierre-Simon Laplace](https://rkbookreviews.files.wordpress.com/2011/08/clip_image0024_thumb.jpg?w=100&h=132) Pierre-Simon Laplace (1749 – 1827)


Bayes’ formula and Price’s reformulation of Bayes’ work would have been in vain had it not been for one genius, Pierre-Simon Laplace, the Isaac Newton of France. The book details the life of Laplace, who independently developed Bayes’ philosophy and then, on stumbling upon the original work, tried to apply it to everything he could possibly think of. Ironically, Bayes’ method was used to argue for the existence of God, while Laplace used it to argue (in a way) for God’s non-existence. In a strange turn of events, at the age of 61 Laplace became a frequentist statistician: data collection had improved, and the mathematics for dealing with precisely measured data was easier under the frequentist approach. Thus the person who gave mathematical life to Bayes’ theory turned into a frequentist for the last 16 years of his life. All said and done, Laplace single-handedly created a gazillion concepts, theorems and tricks in statistics that influenced mathematical developments in France. He is credited with the central limit theorem, generating functions and other terms casually tossed around by today’s statisticians.

Many Doubts, Few Defenders

Laplace launched a craze for statistics by publishing ratios and numbers about French society, such as the number of dead letters in the postal system, the number of thefts, the number of suicides and the proportion of men to women, and declaring that most of them were almost constant. Such numbers whetted the French government’s appetite for more, and soon a plethora of men and women were collecting numbers and calling them statistics. No one cared to attribute effects to particular causes, and no one cared whether probability could be used to quantify a lack of knowledge. The growing army of data collectors quickly amassed an enormous amount of data, and the view that probability is the frequency of occurrence took precedence over every other definition. In modern parlance, long-run frequency became synonymous with probability. Moreover, with large amounts of data Bayesian and frequentist results agree, so there was all the more reason to push Bayesian statistics, which was in any case based on subjective beliefs, into oblivion. Objective frequencies of events seemed far more plausible than any math based on belief systems. By 1850, within two generations of his death, Laplace was remembered largely for astronomy, and not a single copy of his treatise on probability was available in any Parisian bookstore.

The 1870s, 1880s and 1890s were dead years for Bayes’ philosophy. This was the third death of Bayes’ principle. The first came when Thomas Bayes shared it with no one and it lay idle among his papers. The second came after Price published it in a scientific journal and it was largely ignored. The third came by the 1850s, as theoreticians rallied against it.

Precisely during these years, from the 1870s to the 1910s, Bayes’ theory was quietly being used in real-life applications, and with great success. French and Russian artillery officers used it to aim their weapons. Artillery fire involved many uncertain factors, like the enemy’s precise location, air density and wind direction. Joseph Louis Bertrand, a mathematician in the French army, put Bayes’ to use and published a textbook on artillery firing procedures that was used by the French and Russian armies for the next 60 years, until the Second World War. Another area of application was telecommunications: an engineer at Bell Labs created a cost-effective way of dealing with uncertainty in call handling based on Bayes’ principles. The US insurance industry was yet another place where Bayes’ was used effectively. A series of laws passed between 1911 and 1920 obliged employers to provide insurance cover for their employees. With hardly any data available, pricing the premiums became a huge problem. Isaac Rubinow provided some relief by manually classifying records from Europe, which helped tide over the crisis for two to three years. Meanwhile, he created an actuarial society that began using Bayesian philosophy to build a credibility score, a simple statistic that could be easily understood, calculated and communicated. This is like the implied volatility in the Black–Scholes formula: whether or not one understands the Black–Scholes replication argument, one can easily talk about, trade on and form opinions based on an option’s implied volatility. Isaac Rubinow helped create one such statistic for the insurance industry, and it stayed in vogue for at least 50 years.
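The credibility idea fits in a few lines. The book gives no formula, so this sketch assumes a credibility factor of the form Z = n / (n + k). That form was later formalized by Bühlmann and is not necessarily the one Rubinow’s society used. The constant k and all the numbers are hypothetical. The premium is a weighted average of an employer’s own claims experience and the industry-wide rate, which plays the role of the prior. The more experience the employer has, the more weight its own data gets.

```python
def credibility_premium(own_mean, prior_mean, n, k):
    """Blend an employer's own claims experience with the industry rate.

    own_mean   -- average claims per exposure observed for this employer
    prior_mean -- industry-wide ("manual") rate, playing the role of the prior
    n          -- amount of the employer's own experience (e.g. exposure-years)
    k          -- hypothetical constant controlling how fast trust shifts to own data
    """
    z = n / (n + k)  # credibility factor, 0 with no data, approaching 1 as n grows
    return z * own_mean + (1 - z) * prior_mean


# Hypothetical numbers: industry rate 100, this employer's observed rate 150.
for years in (0, 1, 5, 25):
    print(years, round(credibility_premium(150.0, 100.0, years, k=5), 2))
```

With no history, the premium equals the industry rate. As n grows, it moves toward the employer’s own rate. This weighted average is not just a heuristic. For example, with Poisson claim counts and a gamma prior with rate parameter k, the Bayesian posterior mean has exactly this form.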

Despite these achievements, Bayes’ was nowhere near prominence. The trio responsible for nearly sounding its death knell were Ronald Fisher, Egon Pearson and Jerzy Neyman.

![Ronald Aylmer Fisher](https://rkbookreviews.files.wordpress.com/2011/08/clip_image0026_thumb.jpg?w=95&h=125)

![Egon Pearson](https://rkbookreviews.files.wordpress.com/2011/08/clip_image0044_thumb.jpg?w=95&h=125)

Ronald Aylmer Fisher, Egon Pearson and Jerzy Neyman

The fight between Fisher and Karl Pearson is a well-known story in the statistical world. Fisher dismissed Bayes’ theory as utterly useless. He was a flamboyant personality and vociferous in his opinions. He single-handedly created a ton of statistical concepts, like randomization and degrees of freedom. Fisher’s most important contribution was that he made statistics accessible to scientists and researchers who lacked the time or the skills to master it. His manual became a convenient recipe for conducting a scientific experiment. This was in marked contrast to Bayes’ principle, where the scientist has to deal with priors and subjective beliefs. At the same time that Fisher was developing frequentist methods, Egon Pearson and Jerzy Neyman introduced hypothesis testing. It gave a ton of people working in labs a framework for rejecting a null hypothesis in favor of an alternative, with test results communicated mainly through p-values. The 1920s and 1930s were the golden age of frequency-based statistics. Given the enormous influence of these personalities, Bayes’ was nowhere to be seen.

Far from the spotlight enjoyed by the frequentists, however, another trio was quietly advocating and using Bayes’ philosophy: Emile Borel, Frank Ramsey and Bruno De Finetti.


Emile Borel applied Bayesian probability to problems in insurance, biology, agriculture and physics. Frank Ramsey applied the concepts to economics using utility functions. Bruno De Finetti was firmly of the opinion that subjective beliefs could be quantified at the race track. This trio kept Bayes’ alive in a few circles far removed from the English statistical societies.

The larger credit, however, goes to the geophysicist Harold Jeffreys, who single-handedly kept Bayes’ alive during the anti-Bayesian onslaught of the 1930s and 1940s. He was quiet and gentlemanly and could therefore coexist with Fisher. Instead of distancing himself from Fisherian statistics, Jeffreys held that some tools from Fisher’s armory, like maximum likelihood, were equally applicable to Bayesian statistics. However, he was completely against p-values and argued against anyone using them. The battle lines were drawn: Jeffreys and Fisher, two otherwise cordial Cambridge professors, embarked on a two-year debate in the Proceedings of the Royal Society. Sadly, the debate ended inconclusively and frequentism totally eclipsed Bayes’. By the 1930s, Jeffreys was truly a voice in the wilderness. This became the fourth death of Bayes’ theory.

Part II – Second World War Era

Bayes’ goes to War

The book goes on to describe the work of Alan Turing, who used Bayesian methods to break the German Enigma codes during the Second World War and thereby help locate German U-boats.

His work at Bletchley Park, along with that of other cryptographers, validated Bayesian theory. Though Turing and the others did not use the word Bayesian, almost all of their work was Bayesian in spirit. When the Second World War ended, the group was ordered to destroy all the tools built, manuscripts written and manuals published. Some of the important documents became classified, and none of the Bayesian work could be talked about in public. As a result, Bayesian theory remained in oblivion despite its immense use in World War II.

Dead and Buried Again

With the Second World War work classified, Bayes’ was dead again. Until the mid-1960s, not a single article on Bayesian statistics was readily available to scientists for their work. Probability was applied only to long sequences of repeatable events and was hence frequentist in nature. By the mid-1960s Bayes’ theory was ignored by almost everyone. A statistician meant a frequentist who studied asymptotics and talked in p-values, confidence intervals, randomization, hypothesis testing and so on. Prior and posterior distributions were no longer considered useful terms. Bayes’ theory was dead for the fifth time!

Part III – The Glorious Revival

Arthur Bailey

Arthur Bailey, an insurance actuary, was stunned to find that insurance premiums were actually being calculated with Bayes’ theorem, something considered heresy in the schools where he had studied. He was hell-bent on proving the whole concept flawed and wanted to hoist the flag of frequentist statistics over premium calculations. After struggling for a year, he realized that Bayes’ was the right way to go. From then on, he advocated Bayes’ principles massively in the insurance industry: he was vocal about them, published on them and let everyone know that Bayes’ was in fact a wonderful tool for pricing insurance premiums. Arthur Bailey’s son Robert Bailey also helped spread Bayesian statistics by using it to rate a host of things. In time, the insurance industry accumulated so much data that Bayes’ rule, like the slide rule, became obsolete.

From Tool to Theology

Bayes’ stood poised for another of its periodic rebirths as three mathematicians, Jack Good, Leonard Jimmie Savage and Dennis V Lindley, took on the job of turning Bayes’ rule into a respectable form of mathematics and a logically coherent methodology.

![Jack Good](https://rkbookreviews.files.wordpress.com/2011/08/clip_image00210_thumb.jpg?w=110&h=133)

![Jimmie Savage](https://rkbookreviews.files.wordpress.com/2011/08/clip_image0046_thumb.jpg?w=94&h=123)

![Dennis V Lindley](https://rkbookreviews.files.wordpress.com/2011/08/clip_image0064_thumb.jpg?w=118&h=133)

Jack Good, Jimmie Savage and Dennis V Lindley

Jack Good, having been Turing’s wartime assistant, knew the power of Bayes’ and started publishing and making Bayes’ theory known to a variety of people in academia. However, his wartime work was still classified. Hampered by government secrecy and his inability to explain his work, Good remained an independent voice in the Bayesian community. Savage, on the other hand, was instrumental in spreading Bayesian statistics and in building a legitimate mathematical framework for analyzing small data sets. He also wrote books with precise mathematical notation and symbols, thus formalizing Bayes’ theory. However, the books were not widely adopted because the computing machinery needed to implement the ideas was not available. Dennis Lindley, meanwhile, pulled off something remarkable. In Britain he formed small Bayesian circles and pushed for Bayesian appointments in statistics departments. This feat alone deserves enormous credit, as he was taking on the mighty Fisher on his home turf, Britain.

Thanks to Lindley in Britain and Savage in the US, Bayesian theory came of age in the 1960s. The philosophical rationale for using Bayesian methods had been largely settled: it was becoming the only mathematics of uncertainty with an explicit, powerful and secure foundation in logic. How to apply it, though, remained a controversial question.

Jerome Cornfield, Lung Cancer and Heart Attacks

![Jerome Cornfield](https://rkbookreviews.files.wordpress.com/2011/08/clip_image0084_thumb.jpg?w=89&h=115) Jerome Cornfield was the next iconic figure to apply Bayes’ to a real-life problem. He successfully showed the link between smoking and lung cancer, thus introducing Bayes’ to epidemiology. He was probably one of the first people to take on Fisher and win. Fisher was implacably against Bayes’ and had written arguments against Cornfield’s work. Cornfield, with the help of fellow statisticians, showed that all of Fisher’s arguments were baseless, thus resuscitating Bayes’. Cornfield rose to head the American Statistical Association, and this in turn gave Bayes’ a stamp of legitimacy, in fact a stamp of superiority over frequentist statistics. Or at least it was thought that way.


There’s always a first time

Frequentist statistics, by its very definition, cannot assign probabilities to events that have never happened. “What is the probability of an accidental hydrogen bomb detonation?” This was the question on the mind of Madansky, a PhD student under Savage. As a last resort, he had to adopt Bayes’ theory to answer it. The chapter shows that Madansky computed posterior probabilities for such an event and published his findings. His findings were taken very seriously and many precautionary measures were undertaken. The author is of the view that Bayes’ thus helped avert many cataclysmic disasters.
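The following is a minimal sketch of how a Bayesian can say something even when the event count is zero. It uses Laplace’s rule of succession, which assumes a uniform prior on the unknown per-trial probability. It illustrates the general idea only and is not Madansky’s actual calculation. The trial counts are hypothetical.

```python
def frequency_estimate(events, trials):
    """Relative frequency: exactly zero whenever the event has never been observed."""
    return events / trials


def rule_of_succession(events, trials):
    """Posterior mean of p under a uniform Beta(1, 1) prior (Laplace's rule)."""
    return (events + 1) / (trials + 2)


# Hypothetical: an accident that has not happened in 1,000 opportunities.
print(frequency_estimate(0, 1000))   # relative-frequency point estimate
print(rule_of_succession(0, 1000))   # small but strictly positive
```

The Bayesian estimate shrinks as the accident-free record grows, but it never reaches zero. That is what lets a Bayesian put a number on an event that has never happened.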

46656 Varieties

There was an explosion of Bayesian statistics around the 1960s, and Jack Good estimated that there were 46,656 different varieties of Bayesians. Statisticians were clearly grappling with Bayesian statistics, as it was not as straightforward as the frequentist world. Stein’s paradox created further confusion: Stein was an anti-Bayesian, yet the paradox he published looked like a pro-Bayes formula. Stein’s shrinkage estimator smelled like a Bayes formula, but its creator did not credit Bayes for it. There were also serious problems with computing in the Bayesian framework, the most important being the actual integration of complex densities. This was the 1960s and computing power eluded statisticians, so most researchers had to come up with tricks and nifty shortcuts to avoid laborious calculations. Conjugacy was one such concept introduced to make things tractable. It is the property that the prior and the posterior belong to the same family of distributions, which makes estimation and inference much easier. The 1960s and 1970s were the years when academic interest in Bayesian statistics was in full swing, and periodicals, journals and societies formed to work on, espouse and test various Bayesian concepts. The extraordinary fact about the glorious Bayesian revival of the 1950s and 1960s is how few people in any field publicly applied Bayesian theory to real-world problems. As a result, much of the speculation about Bayes’ rule was moot. Until they could prove in public that their method was superior, Bayesians were stymied.
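Conjugacy is easiest to see with the beta-binomial pair, a standard textbook example rather than one from the book. Start with a Beta(a, b) prior on a success probability. After s successes in n trials, the posterior is Beta(a + s, b + n - s). The update is just arithmetic on two parameters, and no integral ever has to be evaluated. The class and the numbers below are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Beta:
    """Beta(a, b) distribution over a probability p."""
    a: float
    b: float

    def mean(self):
        return self.a / (self.a + self.b)

    def update(self, successes, trials):
        """Conjugate update: the posterior is again a Beta distribution."""
        return Beta(self.a + successes, self.b + trials - successes)


prior = Beta(2, 2)                 # mild prior belief that p is near 0.5
posterior = prior.update(7, 10)    # observe 7 successes in 10 trials
print(posterior, round(posterior.mean(), 3))

# Updating in two batches gives the same posterior as one batch.
assert prior.update(3, 4).update(4, 6) == posterior
```

Sequential and batch updating agree. That is part of what made conjugate families so attractive before cheap computing.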

Part IV – To Prove its Worth

Business Decisions

Even though there were a ton of Bayesians around in the 1960s, there were hardly any real-life problems to which Bayes’ had been applied. Even simple problems required computer simulation. Devoid of both computing power and practical applications, Bayes’ was still considered heresy. A few people were gutsy enough to spend their lives changing this situation, and this chapter talks about two of them.

These two Harvard professors set out to apply Bayes’ to business statistics. Osher Schlaifer was a faculty member in accounting and business who was randomly assigned to teach a statistics course. Knowing nothing about the subject, Schlaifer crammed frequentist statistics and then wondered about its usefulness in real life. Slowly he realized that in business one always starts with a prior and develops better probabilities from the data one sees in the real world. This logically brought him to the Bayesian world. The math was demanding, and Schlaifer immersed himself in it to understand every bit. He also came to know about a young professor at Columbia, Howard Raiffa, lobbied Harvard to recruit him and brought him over. For about 7 years, the two of them created many practical applications of Bayesian methods. Realizing that Bayesians had no mathematical toolbox to work with, they developed many concepts that made Bayesian computations easier. They also published books on applying Bayesian statistics to management and detailed notes on Markov chains, and they tried to bring Bayesian material into the curriculum. Despite these efforts, Bayes’ could not really permeate academia, for various reasons mentioned in the chapter. The chapter also mentions that some students burnt their lecture notes in front of a professor’s office to vent their frustration.

Who Wrote the Federalist?

What is the Federalist puzzle? Between 1787 and 1788, three founding fathers of the United States, Alexander Hamilton, John Jay and James Madison, anonymously wrote 85 newspaper articles to persuade New York State voters to ratify the American Constitution. Historians could attribute most of the essays, but no one agreed on whether Madison or Hamilton had written 12 others. Frederick Mosteller at Harvard was excited by this classification problem and immersed himself in it for 10 years, roping David Wallace of the University of Chicago into this massive research exercise. To this day, the Federalist study is cited as THE case study for the technical armory and depth of Bayesian statistics. The puzzle is still discussed today, but the work that went into it showed the world that Bayesian statistics was powerful in analyzing hard real-life problems. One of the conclusions of the work was that the prior did not matter; it was the learning from the data that mattered. It therefore served as a good reminder to everyone who criticized Bayes’ philosophy for its reliance on subjective priors. As an aside, the chapter brings out interesting elements of Frederick Mosteller’s life, and there is much that is inspiring in the way he worked. The life of Mosteller is a must-read for every statistician.
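The following is a heavily simplified sketch of the idea behind the Federalist study. It treats the count of a marker word in a disputed essay as a Poisson draw whose rate depends on the author, then compares the two candidate authors with Bayes’ theorem. Mosteller and Wallace used many function words and more careful models. In this sketch the single marker word, the rates per 1,000 words and the essay itself are all hypothetical.

```python
import math


def poisson_log_pmf(k, rate):
    """log P(K = k) for a Poisson distribution with the given rate."""
    return k * math.log(rate) - rate - math.lgamma(k + 1)


def posterior_prob_first_author(count, words, rate_a, rate_b, prior_a=0.5):
    """P(author A | count) when the marker word occurs Poisson(rate * words / 1000)."""
    log_a = math.log(prior_a) + poisson_log_pmf(count, rate_a * words / 1000)
    log_b = math.log(1 - prior_a) + poisson_log_pmf(count, rate_b * words / 1000)
    # Normalize in log space to avoid underflow for long essays.
    m = max(log_a, log_b)
    return math.exp(log_a - m) / (math.exp(log_a - m) + math.exp(log_b - m))


# Hypothetical rates of a marker word per 1,000 words for each author.
HAMILTON_RATE = 3.0
MADISON_RATE = 0.2

# A hypothetical 2,000-word disputed essay in which the word appears once.
p_hamilton = posterior_prob_first_author(1, 2000, HAMILTON_RATE, MADISON_RATE)
print(f"P(Hamilton | data) = {p_hamilton:.3f}")
```

With many marker words and long essays, the likelihood ratio grows large enough to swamp any reasonable prior. That is one way to read the study’s conclusion that the prior did not matter.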

This chapter also talks about another missed opportunity for Bayes’ to become popular. It profiles John Tukey, sometimes referred to as the Picasso of statistics. He appears to have used Bayesian statistics extensively in his military work, his election-prediction work and elsewhere. However, he kept this work confidential, partly because he was obliged to and partly by his own choice. The author gives enough pointers for the reader to infer that Tukey was an out-and-out Bayesian.

Three Mile Island

Despite its utility in the real world, 200 years on, people were still avoiding the word Bayes’. Even though many used the method in some form or other, no one openly declared it. This changed after the accident at the Three Mile Island civilian nuclear power plant. Frequentist reasoning had considered such an event very remote, but Bayesian analysis had anticipated it. The accident brought recognition that starting from subjective beliefs and working out posterior probabilities is not heresy.

The Navy Searches

This is one of the longest chapters in the book, and it describes how the Navy used Bayesian methods to locate lost objects like hydrogen bombs and submarines. In the beginning, even though Bayes’ was used, no one was ready to publicize the fact; Bayes’ was still a taboo word. Over time, as Bayes’ was used ever more effectively to locate things lying on the ocean floor, it gained enormous recognition in Navy circles. Subsequently it was used to track moving objects, which also proved very successful. For the first time, Monte Carlo methods were used extensively to calculate posterior probabilities, at least 20 years before they excited academia. Cute tricks like conjugacy were also quickly incorporated to calculate summaries of posterior probabilities.
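The core update in Bayesian search theory fits in a few lines. Assume a hypothetical grid of ocean cells, each with a prior probability of containing the lost object. Also assume a detection probability for searching a cell that does contain it. After an unsuccessful search of one cell, Bayes’ theorem lowers that cell’s probability and raises every other cell’s. The grid, the numbers and the greedy "search the most likely cell next" policy are illustrative assumptions, not details of the Navy cases in the book.

```python
def update_after_failed_search(probs, searched, detect_prob):
    """Posterior cell probabilities after searching one cell and finding nothing.

    probs       -- prior probability that the object is in each cell (sums to 1)
    searched    -- index of the cell that was searched
    detect_prob -- P(find it | it is in the searched cell)
    """
    p_miss = 1 - probs[searched] * detect_prob  # P(search finds nothing)
    posterior = []
    for i, p in enumerate(probs):
        if i == searched:
            posterior.append(p * (1 - detect_prob) / p_miss)
        else:
            posterior.append(p / p_miss)
    return posterior


def most_likely_cell(probs):
    return max(range(len(probs)), key=lambda i: probs[i])


# Hypothetical prior over four cells; a search finds the object 80% of the time if it is there.
probs = [0.4, 0.3, 0.2, 0.1]
for step in range(3):
    cell = most_likely_cell(probs)  # simple greedy choice of where to look next
    probs = update_after_failed_search(probs, cell, detect_prob=0.8)
    print(step, cell, [round(p, 3) for p in probs])
```

Real search planning also weighs detection probability and search cost per cell. This sketch keeps only the Bayesian bookkeeping.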

Part V – Victory

Eureka

Bayes’ theory had survived five near-deaths: Bayes shelved it, Price published it but it was ignored, Laplace discovered his own version but later favored frequency theory, frequentists virtually banned it, and the military kept it secret. By the 1980s, data was being generated at an enormous rate in many domains, and statisticians faced the “curse of dimensionality”. Fisherian and Neyman–Pearson methods, suited to data with a few variables, were inadequate for data with an explosive number of variables. How to separate the signal from the noise became a vexing problem. Naturally, the data sets analyzed in academia were the ones with fewer variables. By contrast, others such as physical and biological scientists analyzed massive amounts of data about plate tectonics, pulsars, evolutionary biology, pollution, the environment, economics, health, education and social science. Yet Bayes’ was stuck again because of its computational complexity.

In such a situation, Lindley and his student Adrian F Smith showed Bayesians the way to develop hierarchical models.

The hierarchical models fell flat, as they were too specialized and stylized for many scientific applications (it would be another 20 years before students began their introduction to Bayes’ by looking into hierarchical models). Meanwhile, Adrian Raftery studied the rates of coal-mining disasters using Bayesian statistics. He was thrilled to publicize one of Bayes’ strengths, namely evaluating competing models or hypotheses. Frequency-based statistics works well when one hypothesis is a special case of the other and both assume gradual behavior. But when hypotheses compete and neither is a special case of the other, frequentism is not as helpful, especially with data involving abrupt changes, like the formation of a militant union.
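Comparing two non-nested models of this kind can be illustrated with a Bayes factor. The sketch compares a "constant rate" model against a "rate changes abruptly at an unknown point" model. It assumes Poisson counts with a conjugate gamma prior on each rate, a uniform prior over the change point, and prior settings alpha = beta = 1. The counts are invented for illustration and are not the coal-mining data Raftery analyzed.

```python
import math


def log_marginal_poisson_gamma(counts, alpha, beta):
    """log of the integral of prod Poisson(x_i | lam) * Gamma(lam | alpha, rate=beta) dlam."""
    n, s = len(counts), sum(counts)
    return (alpha * math.log(beta) - math.lgamma(alpha)
            + math.lgamma(alpha + s) - (alpha + s) * math.log(beta + n)
            - sum(math.lgamma(x + 1) for x in counts))


def log_sum_exp(values):
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def log_bayes_factor_change_point(counts, alpha=1.0, beta=1.0):
    """log[P(data | one change point) / P(data | constant rate)].

    The change point is uniform over the n - 1 gaps between observations,
    and each segment gets its own independent Gamma(alpha, beta) rate.
    """
    n = len(counts)
    log_constant = log_marginal_poisson_gamma(counts, alpha, beta)
    split_terms = [
        log_marginal_poisson_gamma(counts[:t], alpha, beta)
        + log_marginal_poisson_gamma(counts[t:], alpha, beta)
        for t in range(1, n)
    ]
    log_change = log_sum_exp(split_terms) - math.log(n - 1)
    return log_change - log_constant


# Hypothetical yearly accident counts with an abrupt drop halfway through.
abrupt = [5, 4, 6, 5, 4, 5, 6, 5, 1, 0, 1, 1, 0, 1, 0, 1]
print(round(log_bayes_factor_change_point(abrupt), 2))  # positive favors a change
```

Neither model is a special case of the other, yet the Bayes factor compares them directly. On steady data such as `[3] * 16`, it favors the simpler constant-rate model.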

During the same period, 1985-1990, image processing and analysis had become critically important for the military, industrial automation and medical diagnosis. Blurry, distorted, imperfect images were coming from military aircraft, infrared sensors, ultrasound machines, photon emission tomography, magnetic resonance imaging (MRI) machines, electron micrographs and astronomical telescopes. All these images needed signal processing, noise removal and deblurring to make them recognizable. All were inverse problems ripe for Bayesian analysis. The first known attempt to use Bayes’ to process and restore images involved nuclear weapons testing at Los Alamos National Laboratory. Bobby R. Hunt suggested Bayes’ to the laboratory and used it in 1973 and 1974. The work was classified, but during this period he and Harry C. Andrews wrote a book, “Digital Image Restoration”, about the basic methodology. There was thus immense interest in image pattern recognition during these years.

Stuart Geman and Donald Geman became enthusiastic about applying Bayes’ to pattern recognition after attending a seminar. They went on to invent a technique called the Gibbs sampler.
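Gibbs sampling draws from a joint distribution by repeatedly sampling each variable from its conditional distribution given the others. This minimal sketch targets a standard bivariate normal with correlation rho, a textbook example rather than the Gemans’ image-restoration model. It is chosen because each conditional is itself normal: x given y is N(rho * y, 1 - rho^2), and symmetrically for y given x. The seed and sample sizes are arbitrary.

```python
import math
import random


def gibbs_bivariate_normal(rho, n_samples, burn_in=1000, seed=0):
    """Sample (x, y) from a standard bivariate normal with correlation rho."""
    rng = random.Random(seed)
    cond_sd = math.sqrt(1 - rho ** 2)  # standard deviation of each full conditional
    x = y = 0.0
    samples = []
    for i in range(burn_in + n_samples):
        x = rng.gauss(rho * y, cond_sd)  # draw x from p(x | y)
        y = rng.gauss(rho * x, cond_sd)  # draw y from p(y | x), using the new x
        if i >= burn_in:
            samples.append((x, y))
    return samples


samples = gibbs_bivariate_normal(rho=0.8, n_samples=20000)
mean_x = sum(x for x, _ in samples) / len(samples)
mean_xy = sum(x * y for x, y in samples) / len(samples)
print(round(mean_x, 3), round(mean_xy, 3))  # should be near 0 and near rho
```

The sampler never evaluates the joint density or any integral. It only needs the two one-dimensional conditionals, which is what makes the idea scale to large models.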

These were the first signs of computational techniques for Bayes’. It was Smith who teamed up with Alan Gelfand and turned up the heat on developing them. OK, now a bit about Alan Gelfand.

Gelfand started working on the EM (Expectation-Maximization) algorithm. The first time I came across the EM algorithm was in Casella and Berger’s Statistical Inference, where it is very well explained. However, such books miss one part that can only be obtained by reading around the subject: the history. This chapter mentions a historical tidbit about EM that makes learning it more interesting. It says:

EM algorithm, an iterative system secretly developed by the National Security Agency during the Second World War or the early Cold War. Arthur Dempster and his student Nan Laird at Harvard discovered EM independently a generation later and published it for civilian use in 1977. Like the Gibbs sampler, the EM algorithm worked iteratively to turn a small data sample into estimates likely to be true for an entire population.
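The iterative nature of EM is easy to see in a small example. This sketch assumes a standard textbook setup, not one from the book. There are several sets of 10 coin tosses, each made with one of two coins of unknown bias. Which coin was used is hidden, and each coin is equally likely to be chosen. The E-step computes how likely each set is to have come from each coin. The M-step re-estimates both biases from those soft assignments. The data and starting values are illustrative.

```python
import math


def set_likelihood(theta, heads, tosses):
    """P(a particular sequence with `heads` heads | coin bias theta).

    The binomial coefficient is omitted: it cancels in the E-step and only
    shifts the log-likelihood by a constant.
    """
    return theta ** heads * (1 - theta) ** (tosses - heads)


def log_likelihood(theta_a, theta_b, head_counts, tosses):
    """Observed-data log-likelihood with each coin chosen with probability 1/2."""
    return sum(
        math.log(0.5 * set_likelihood(theta_a, h, tosses)
                 + 0.5 * set_likelihood(theta_b, h, tosses))
        for h in head_counts
    )


def em_two_coins(head_counts, tosses, theta_a, theta_b, iterations):
    history = [log_likelihood(theta_a, theta_b, head_counts, tosses)]
    for _ in range(iterations):
        # E-step: responsibility of coin A for each set of tosses.
        heads_a = tails_a = heads_b = tails_b = 0.0
        for h in head_counts:
            la = set_likelihood(theta_a, h, tosses)
            lb = set_likelihood(theta_b, h, tosses)
            w_a = la / (la + lb)
            heads_a += w_a * h
            tails_a += w_a * (tosses - h)
            heads_b += (1 - w_a) * h
            tails_b += (1 - w_a) * (tosses - h)
        # M-step: weighted maximum-likelihood estimate of each bias.
        theta_a = heads_a / (heads_a + tails_a)
        theta_b = heads_b / (heads_b + tails_b)
        history.append(log_likelihood(theta_a, theta_b, head_counts, tosses))
    return theta_a, theta_b, history


heads_per_set = [5, 9, 8, 4, 7]   # heads out of 10 tosses in each set
a, b, ll = em_two_coins(heads_per_set, 10, theta_a=0.6, theta_b=0.5, iterations=10)
print(round(a, 3), round(b, 3))
```

Each pass can only increase (or keep) the observed-data log-likelihood stored in `history`. This monotonicity is the property that makes EM a reliable iterative estimator.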

Gelfand and Smith saw the connection between the Gibbs sampler, Markov chains and Bayesian methodology. They recognized that Bayesian formulations, especially the integrals involved, could be handled using a mix of Markov chains and simulation. When Smith spoke at a workshop in Quebec in June 1989, he showed that Markov chain Monte Carlo could be applied to almost any statistical problem. It was a revelation. Bayesians went into “shock induced by the sheer breadth of the method.” By replacing integration with Markov chains, they could finally, after 250 years, calculate realistic priors and likelihood functions and do the difficult calculations needed to get posterior probabilities.

While these developments were happening in statistics, an outsider might be surprised at how slowly Monte Carlo methods were applied in statistics, since physicists had been using them as early as the 1930s. Fermi, a Nobel Prize-winning physicist, used Markov chains to study nuclear physics, but he could not publish his work because it was classified. However, in 1949, Maria Goeppert, a physicist and future Nobel Prize winner, gave a public talk on Markov chains and simulation and their application to real-world problems.

That same year Nicholas Metropolis, who had named the algorithm Monte Carlo for Ulam’s gambling uncle, described the method in general terms for statisticians in the prestigious Journal of the American Statistical Association. But he did not detail the algorithm’s modern form until 1953, when his article appeared in the Journal of Chemical Physics, which is generally found only in physics and chemistry libraries. Bayesians ignored the paper. In 1970 W. Keith Hastings generalized the algorithm in a paper in Biometrika. Today, computers routinely use the Hastings–Metropolis algorithm to work on problems involving more than 500,000 hypotheses and thousands of parallel inference problems. Hastings was 20 years ahead of his time. Had he published his paper when powerful computers were widely available, his career would have been very different. As he recalled, “A lot of statisticians were not oriented toward computing. They take these theoretical courses, crank out theoretical papers, and some of them want an exact answer.” The Hastings–Metropolis algorithm provides estimates, not precise numbers. Hastings dropped out of research and settled at the University of Victoria in British Columbia in 1971. He learned about the importance of his work only after his retirement in 1992.
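A random-walk Metropolis sampler is the simplest member of the Hastings–Metropolis family. It needs only an unnormalized posterior, which is why it sidesteps the integrals that had stalled Bayesians. This sketch assumes a hypothetical coin with 7 heads in 10 tosses and a uniform prior. That setup is chosen because the exact posterior, Beta(8, 4) with mean 2/3, is known and lets the simulation be checked. The proposal step size, burn-in and seed are arbitrary choices.

```python
import math
import random


def log_unnormalized_posterior(theta, heads=7, tails=3):
    """log[prior * likelihood] up to a constant; uniform prior on (0, 1)."""
    if not 0.0 < theta < 1.0:
        return -math.inf  # zero prior density outside the unit interval
    return heads * math.log(theta) + tails * math.log(1.0 - theta)


def metropolis(log_target, start, n_samples, step=0.1, burn_in=2000, seed=0):
    """Random-walk Metropolis with a symmetric Gaussian proposal."""
    rng = random.Random(seed)
    current, current_lp = start, log_target(start)
    samples = []
    for i in range(burn_in + n_samples):
        proposal = current + rng.gauss(0.0, step)
        proposal_lp = log_target(proposal)
        # Accept with probability min(1, target(proposal) / target(current)).
        if rng.random() < math.exp(min(0.0, proposal_lp - current_lp)):
            current, current_lp = proposal, proposal_lp
        if i >= burn_in:
            samples.append(current)
    return samples


draws = metropolis(log_unnormalized_posterior, start=0.5, n_samples=50000)
print(round(sum(draws) / len(draws), 3))  # compare with the exact mean 8/12
```

The normalizing constant of the posterior never appears. Only ratios of the target density are used, so the answer is an estimate whose accuracy improves with more draws. That is the trade of exact numbers for simulated ones that the text describes.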

Gelfand and Smith published their synthesis just as cheap, high-speed desktop computers finally became powerful enough to house large software packages that could explore relationships between different variables. Bayes was beginning to look like a theory in want of a computer. The computations that had irritated Laplace in the 1780s, and that frequentists avoided with their variable-scarce data sets, seemed to be the problem, not the theory itself.

The papers published by Gelfand and Smith were considered landmarks in statistics. They showed that Markov chain simulations applied to Bayes could solve essentially any frequentist problem and, more importantly, many other problems. The method was baptized Markov chain Monte Carlo, or MCMC for short. The combination of Bayes and MCMC has been called “arguably the most powerful mechanism ever created for processing data and knowledge.”

After the birth of MCMC in the 1990s, statisticians could study data sets in genomics or climatology and build models far bigger than physicists could ever have imagined when they first developed Monte Carlo methods. For the first time, Bayesians did not have to fall back on oversimplified “toy” assumptions. Over the next decade, the most heavily cited paper in the mathematical sciences was a study of practical Bayesian applications in genetics, sports, ecology, sociology, and psychology. The number of publications using MCMC increased exponentially.

Almost instantaneously MCMC and Gibbs sampling changed statisticians’ entire method of attacking problems. In the words of Thomas Kuhn, it was a paradigm shift. MCMC solved real problems, used computer algorithms instead of theorems, and led statisticians and scientists into a world where “exact” meant “simulated” and repetitive computer operations replaced mathematical equations. It was a quantum leap in statistics.

Appreciation of the people behind techniques is important in any field. The takeaway from this chapter is that credit for the birth of MCMC goes to Adrian F. Smith and Alan Gelfand.

![](https://rkbookreviews.files.wordpress.com/2011/08/clip_image0124_thumb.jpg?w=100&h=68)

Bayes and MCMC found applications in genetics and a host of other domains. MCMC took off, and Bayes was finally vindicated. One problem remained: there was no readily available software to carry out the computations. MCMC, the Gibbs sampler, and Metropolis–Hastings were powerful ideas, but they needed general-purpose software before a wide audience could use them. The story gets interesting with the contribution of David Spiegelhalter.

Smith’s student, David Spiegelhalter, was working in Cambridge at the Medical Research Council’s biostatistics unit. He had a rather different point of view about using Bayes for computer simulations. Statisticians had never considered producing software for others to be part of their jobs. But Spiegelhalter, influenced by computer science and artificial intelligence, decided it was part of his. In 1989 he started developing a generic software program for anyone who wanted to use graphical models for simulations. Spiegelhalter unveiled his free, off-the-shelf BUGS program (short for Bayesian inference Using Gibbs Sampling) in 1991.

Ecologists, sociologists, and geologists quickly adopted BUGS and its variants, WinBUGS for Microsoft users, LinBUGS for Linux, and OpenBUGS. Computer science, machine learning, and artificial intelligence also joyfully swallowed up BUGS. Since then it has been applied to disease mapping, pharmacometrics, ecology, health economics, genetics, archaeology, psychometrics, coastal engineering, educational performance, behavioral studies, econometrics, automated music transcription, sports modeling, fisheries stock assessment, and actuarial science.

A few more examples of the formal adoption of Bayes mentioned in the book:

- The Food and Drug Administration (FDA) allows manufacturers of medical devices to use Bayesian analyses in their final applications for FDA approval.

- Drug companies use WinBUGS extensively when submitting their pharmaceuticals for reimbursement by the English National Health Service.

- The Wildlife Protection Act was amended to accept Bayesian analyses alerting conservationists early to the need for more data.

- Today many fisheries journals demand Bayesian analyses.

- The Forensic Science Service in Britain has also adopted Bayesian methods.

Rosetta Stones

The last chapter recounts the many applications in which Bayes has been used successfully. This chapter alone will motivate anyone to keep a Bayesian mindset while solving real-life problems with statistics.

Bayes has broadened to the point where it overlaps computer science, machine learning, and artificial intelligence. It is empowered by techniques developed both by Bayesian enthusiasts during their decades in exile and by agnostics from the recent computer revolution. It allows its users to assess uncertainties when hundreds or thousands of theoretical models are considered; combine imperfect evidence from multiple sources and make compromises between models and data; deal with computationally intensive data analysis and machine learning; and, as if by magic, find patterns or systematic structures deeply hidden within a welter of observations. It has spread far beyond the confines of mathematics and statistics into high finance, astronomy, physics, genetics, imaging and robotics, the military and antiterrorism, Internet communication and commerce, speech recognition, and machine translation. It has even become a guide to new theories about learning and a metaphor for the workings of the human brain.

Some of the interesting points mentioned in this chapter are:

- In this ecumenical atmosphere, two longtime opponents, Bayes’ rule and Fisher’s likelihood approach, ended their cold war and, in a grand synthesis, supported a revolution in modeling. Many of the newer practical applications of statistical methods are the results of this truce.

- Bradley Efron, the inventor of the bootstrap, admitted that he had always been a Bayesian.

- Mathematical game theorists John C. Harsanyi and John Nash shared a Bayesian Nobel in 1994.

- Amos Tversky, whose joint work with Daniel Kahneman was recognized by Kahneman’s 2002 Nobel Prize, thought through problems using Bayesian methods, though he reported his findings in frequentist terms.

- Economics departments at all Ivy League schools are offering crash courses in Bayesian concepts.

- Renaissance Technologies uses Bayesian approaches heavily for portfolio management and technical trading. Robert L. Mercer of Renaissance says: “RenTec gets a trillion bytes of data a day, from newspapers, AP wire, all the trades, quotes, weather reports, energy reports, government reports, all with the goal of trying to figure out what’s going to be the price of something or other at every point in the future. We want to know in three seconds, three days, three weeks, three months. The information we have today is a garbled version of what the price is going to be next week. People don’t really grasp how noisy the market is. It’s very hard to find information, but it is there, and in some cases it’s been there for a long, long time. It’s very close to science’s needle-in-a-haystack problem.”

- Bayes has found a comfortable niche in high-energy astrophysics, x-ray astronomy, gamma ray astronomy, cosmic ray astronomy, neutrino astrophysics, and image analysis.

- Biologists who study genetic variation, and who are often limited to tiny snippets of information, regard Bayes as manna from heaven for such problems.

- Sebastian Thrun of Stanford built a driverless car named Stanley. The Defense Advanced Research Projects Agency (DARPA) staged a contest with a $2 million prize for the best driverless car, because the military wants to employ robots instead of manned vehicles in combat. In a watershed for robotics, Stanley won the competition in 2005 by crossing 132 miles of Nevada desert in seven hours.

- On the Internet, Bayes has worked its way into the very fiber of modern life. It helps to filter out spam; sell songs, books, and films; search for web sites; translate foreign languages; and recognize spoken words.

- A $1 million contest sponsored by Netflix.com illustrates the prominent role of Bayesian concepts in modern e-commerce and learning theory.

- Google also uses Bayesian techniques to classify spam and pornography and to find related words, phrases, and documents.

- The blue ribbons Google won in 2005 in a machine translation contest sponsored by the National Institute of Standards and Technology showed that progress was coming not from better algorithms but from more training data. Computers don’t “understand” anything, but they do recognize patterns. By 2009 Google was providing online translations in dozens of languages, including English, Albanian, Arabic, Bulgarian, Catalan, Chinese, Croatian, Czech, Danish, Dutch, Estonian, Filipino, Finnish, and French.
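Spam filtering is the easiest of these applications to illustrate. The book does not describe Google’s actual method, so the following is only a minimal Python sketch of a generic naive Bayes classifier trained on a hypothetical four-message data set. The prior is the share of spam in the training data, each word contributes a likelihood term, and Bayes’ rule combines them into a posterior probability of spam. The function names `train` and `posterior_spam` are hypothetical, and the “naive” assumption that words are independent given the label is a deliberate simplification.

```python
import math
from collections import Counter


def train(messages):
    """messages: list of (text, label) pairs, with label 'spam' or 'ham'."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in messages:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, label_counts, vocab


def posterior_spam(text, word_counts, label_counts, vocab):
    """P(spam | words) via Bayes' rule, assuming words are independent given the label."""
    total = sum(label_counts.values())
    log_scores = {}
    for label in ("spam", "ham"):
        # Log prior: the share of training messages carrying this label.
        score = math.log(label_counts[label] / total)
        n_words = sum(word_counts[label].values())
        for word in text.lower().split():
            # Add-one (Laplace) smoothing keeps unseen words from zeroing the product.
            score += math.log((word_counts[label][word] + 1) / (n_words + len(vocab)))
        log_scores[label] = score
    # Normalize the two log scores into a probability without numeric underflow.
    top = max(log_scores.values())
    spam = math.exp(log_scores["spam"] - top)
    ham = math.exp(log_scores["ham"] - top)
    return spam / (spam + ham)


training = [
    ("win money now", "spam"),
    ("cheap money offer", "spam"),
    ("meeting notes attached", "ham"),
    ("lunch meeting tomorrow", "ham"),
]
model = train(training)
print(round(posterior_spam("win cheap money", *model), 3))
print(round(posterior_spam("notes for meeting", *model), 3))
```

Working in log space is a design choice: multiplying many small word probabilities directly would underflow on real-length messages.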

What’s the future of Bayes? In the author’s words:

Given Bayes’ contentious past and prolific contributions, what will the future look like? The approach has already proved its worth by advancing science and technology from high finance to e-commerce, from sociology to machine learning, and from astronomy to neurophysiology. It is the fundamental expression for how we think and view our world. Its mathematical simplicity and elegance continue to capture the imagination of its users.

The overall message of the last chapter is that Bayes is just getting started and will have a tremendous influence in the years to come.

Persi Diaconis, a Bayesian at Stanford, says, "Twenty-five years ago, we used to sit around and wonder, ‘When will our time come?’ Now we can say: ‘Our time is now.’"

Bayes is used in many problem areas, and this book provides a fantastic historical narrative of the birth-death process that Bayes went through before it was finally vindicated. The author’s sketches of various statisticians make the book an extremely interesting read.

![clip_image002[8] clip_image002[8]](https://rkbookreviews.files.wordpress.com/2011/08/clip_image0028_thumb.jpg?w=93&h=115)

![clip_image002[12] clip_image002[12]](https://rkbookreviews.files.wordpress.com/2011/08/clip_image00212_thumb.jpg?w=94&h=115)

![clip_image004[8] clip_image004[8]](https://rkbookreviews.files.wordpress.com/2011/08/clip_image0048_thumb.jpg?w=110&h=115)

![clip_image006[6] clip_image006[6]](https://rkbookreviews.files.wordpress.com/2011/08/clip_image0066_thumb.jpg?w=115&h=115)

![clip_image008[6] clip_image008[6]](https://rkbookreviews.files.wordpress.com/2011/08/clip_image0086_thumb.jpg?w=97&h=115)

![clip_image002[14] clip_image002[14]](https://rkbookreviews.files.wordpress.com/2011/08/clip_image00214_thumb.jpg?w=84&h=115)

![clip_image004[10] clip_image004[10]](https://rkbookreviews.files.wordpress.com/2011/08/clip_image00410_thumb.jpg?w=78&h=115)

![clip_image006[8] clip_image006[8]](https://rkbookreviews.files.wordpress.com/2011/08/clip_image0068_thumb.jpg?w=115&h=115)

![clip_image008[8] clip_image008[8]](https://rkbookreviews.files.wordpress.com/2011/08/clip_image0088_thumb.jpg?w=171&h=115)

![clip_image010[4] clip_image010[4]](https://rkbookreviews.files.wordpress.com/2011/08/clip_image0104_thumb.jpg?w=101&h=115)

![clip_image014[4] clip_image014[4]](https://rkbookreviews.files.wordpress.com/2011/08/clip_image0144_thumb.jpg?w=78&h=115)
