In many applied math problems one needs to visualize higher-dimensional spaces, even though such spaces have no direct physical meaning. Our inability to see more than three dimensions does not mean we cannot visualize and understand multidimensional space. For a superb account of how to think about higher dimensions, read “Flatland”, the classic book about a two-dimensional world, which describes the experiences of a two-dimensional square trying to come to terms with a three-dimensional world. After reading “Flatland”, one becomes more open to understanding multidimensional spaces. Developing abstract thinking is the key to doing math and modeling, and linear algebra, being the study of multidimensional spaces, is very important for sharpening that kind of thinking.

One typically comes across matrices in high school or in some basic math course. Some even take a linear algebra course from a computational angle, i.e., given a problem, one knows how to solve it, be it addition, multiplication or inversion of matrices, or using QR, SVD or LU decompositions. In such an approach, though one develops analytic skills, the crucial thinking skills are lost. What do I mean by this? Well, given two matrices A and B, a high school or college student can probably multiply them the row-times-column way and produce C. He goes on to solve a lot of such problems and pats himself on the back that he can do any matrix multiplication in a jiffy. With computers today, he does not even need to know the odd row-times-column rule that is the crux of matrix multiplication. He punches the matrices into a calculator or computer, and it throws out C, the product of A and B.

Ask the same student, “Why does matrix multiplication involve row-times-column operations? Doesn’t it seem odd? What is the motivation?” In all probability he will draw a blank. Often, when instructors come to matrix multiplication, there is some murmuring in the class about its odd behaviour. Not many ask the reason for such a rule. They take it for granted and go through the grind of doing tons of exercises. Despite solving many exercises and cracking a few exams, what is lacking is an understanding of the principles behind something as simple as matrix multiplication. This is the sad story of most of the math education happening the world over. It is certainly the state of the Indian education system, where students are trained to be workhorses and eventually become better at solving questions than at posing interesting questions and then solving them. As a friend of mine quips, “We are good at solving a question paper set by others; we are seldom good at setting our own question paper and solving it.” Very true!

Books such as this one should be read by teachers the world over, starting at the high school level. Their insights should be used in class so that students can see the motivation behind what they learn. Once an instructor provides motivation for the subject, the teaching material becomes a supplement that follows a clear direction. The exact material taught then matters less, as the student is likely to figure things out by himself; all the student needs is the right motivation and direction.

Anyway, coming back to my motivation for reading this book. I was trying to understand the use of Hilbert spaces in the context of the Lebesgue integral and felt a great need to understand inner product spaces thoroughly. They are explained very well in this book. Instead of merely talking about how the book deals with inner product spaces, I will attempt to summarize the entire book, for two reasons. First, this is THE BEST MATH book that I have read to date. Second, it deserves a full summary rather than a piecemeal highlighter summary. Let me get started.

Chapter 1: Vector Spaces

The chapter defines vector spaces and spells out their properties.

A vector space is a set V together with an addition on V and a scalar multiplication on V such that the following properties hold: commutativity, associativity, additive identity, additive inverse, multiplicative identity and the distributive properties.

Properties of Vector Spaces

- A vector space has a unique additive identity.
- Every element of a vector space has a unique additive inverse.
- $0v = 0$ for every $v \in V$ (the scalar 0 times any vector is the zero vector).
- $a0 = 0$ for every scalar $a$ (any scalar times the zero vector is the zero vector).
- $(-1)v = -v$ for every $v \in V$.
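To show how such properties follow from the axioms alone, here is a short derivation of $0v = 0$ that uses only the distributive property and additive inverses (a standard argument, written out here as an illustration):

```latex
\begin{aligned}
0v &= (0 + 0)v = 0v + 0v && \text{(distributive property)}\\
0v + (-(0v)) &= 0v + 0v + (-(0v)) && \text{(add the additive inverse of } 0v \text{ to both sides)}\\
0 &= 0v.
\end{aligned}
```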

Since a vector space can be very large, the book also introduces subspaces. A subset U of V is a subspace if it contains the additive identity 0 and is closed under addition and scalar multiplication; with the operations inherited from V, it is then a vector space in its own right.

The concept of a direct sum is introduced next. Suppose that $U_1, \dots, U_n$ are subspaces of V. Then V is the direct sum $U_1 \oplus \dots \oplus U_n$ when every element of V can be written as a sum $u_1 + \dots + u_n$ with each $u_j \in U_j$, and the only way to write 0 as such a sum is to take every $u_j = 0$. Equivalently, every element of V has exactly one such representation.
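A small example in $\mathbf{F}^3$ (my own illustration, in the spirit of the book's examples) shows the difference between a sum and a direct sum:

```latex
\begin{aligned}
&U_1 = \{(x, y, 0)\},\quad U_2 = \{(0, 0, z)\}:\quad (x, y, z) = (x, y, 0) + (0, 0, z) \text{ uniquely, so } \mathbf{F}^3 = U_1 \oplus U_2.\\
&U_1 = \{(x, y, 0)\},\quad U_3 = \{(0, y, z)\}:\quad U_1 + U_3 = \mathbf{F}^3, \text{ but } 0 = (0, 1, 0) + (0, -1, 0),\\
&\text{so } 0 \text{ has a second representation and the sum } U_1 + U_3 \text{ is not direct.}
\end{aligned}
```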

Chapter 2: Finite Dimensional Vector Spaces

This chapter introduces span, linear independence, basis and dimension. The span of a list of vectors is the set of all their linear combinations, and a list spans V if every vector in V is a linear combination of the list. A list is linearly independent if the only way to combine its vectors to produce the 0 vector is to take all coefficients equal to 0. In a finite-dimensional space, the length of every linearly independent list is at most the length of every spanning list. A list that is both linearly independent and spanning is a basis, and since all bases of a finite-dimensional space have the same length, that length is called the dimension of the space.
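These definitions can be tried out on lists in $\mathbf{F}^n$ with a small sketch of my own (not code from the book). The key observation is that the rank computed by Gaussian elimination is the dimension of the span, which is all that is needed to test spanning, linear independence and being a basis. Entries are treated as exact rationals to avoid rounding issues.

```python
from fractions import Fraction


def rank(vectors):
    """Dimension of the span of `vectors`, via exact Gaussian elimination over the rationals."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    ncols = len(rows[0]) if rows else 0
    r = 0  # number of pivots found so far
    for c in range(ncols):
        # look for a row (at or below row r) with a nonzero entry in column c
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        # clear column c in every other row
        for i in range(len(rows)):
            if i != r and rows[i][c] != 0:
                factor = rows[i][c] / rows[r][c]
                rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r


def is_linearly_independent(vectors):
    # independent exactly when no vector is redundant, i.e. the span has full dimension
    return rank(vectors) == len(vectors)


def spans(vectors, n):
    # the list spans F^n exactly when its span has dimension n
    return rank(vectors) == n


def is_basis(vectors, n):
    return is_linearly_independent(vectors) and spans(vectors, n)


spanning = [(1, 0, 0), (0, 1, 0), (0, 0, 1), (1, 1, 1)]  # spans F^3 but is dependent
independent = [(1, 0, 0), (1, 1, 0)]                   # independent but too short to span
basis = [(1, 0, 0), (1, 1, 0), (1, 1, 1)]              # both, hence a basis of F^3

print(spans(spanning, 3), is_linearly_independent(spanning))        # True False
print(spans(independent, 3), is_linearly_independent(independent))  # False True
print(is_basis(basis, 3), rank(basis))                               # True 3
```

The three example lists mirror the chapter's picture: a spanning list of length 4 in a 3-dimensional space must be dependent, an independent list of length 2 cannot span it, and a basis sits exactly in between.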

Chapter 3: Linear Maps

One does not usually find the term “linear map” in books on linear algebra. It is refreshing to see operators introduced via linear maps. A map T from a vector space V to a vector space W is called a linear map if it satisfies additivity, $T(u + v) = Tu + Tv$, and homogeneity, $T(av) = a(Tv)$. The chapter then shows that the linear maps from V to W themselves form a vector space, by defining addition and scalar multiplication of linear maps.

The null space and range of a linear map are defined, along with their properties. An invertible map is then characterized as one that is both injective and surjective. Many books argue non-invertibility via a determinant equal to 0, but the linear-map approach is more elegant: a linear map is invertible exactly when it is injective and surjective. The highlight of this chapter is that it shows the motivation behind matrix multiplication. “Why do we multiply two matrices the way we do?” is neatly answered by showing that the rule is a by-product of composing two linear maps: the matrix of the composition ST equals the product of the matrices of S and T. The chapter then defines an operator, which is a linear map from a space to itself. This chapter is the key to understanding everything in the book.
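The claim about matrix multiplication can be checked directly. Column k of the matrix of a map is the image of the k-th basis vector, so column k of the matrix of ST is $S(Te_k) = \sum_j M(T)_{jk}\, S(e_j)$, whose i-th entry is exactly row i of M(S) times column k of M(T). The sketch below builds the matrix of each map column by column and confirms that the matrix of the composition, computed only from the composed map, equals the row-times-column product. The maps T and S are arbitrary examples of mine, using standard bases.

```python
def matrix_of(linear_map, n):
    """Matrix of a linear map F^n -> F^m w.r.t. standard bases: column k is the image of e_k."""
    columns = [linear_map([1 if i == k else 0 for i in range(n)]) for k in range(n)]
    m = len(columns[0])
    return [[columns[k][j] for k in range(n)] for j in range(m)]


def matmul(A, B):
    """The usual row-times-column product."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


# Two example linear maps: T : F^2 -> F^3 and S : F^3 -> F^2.
def T(v):
    x, y = v
    return [x + 2 * y, 3 * x, -y]


def S(w):
    a, b, c = w
    return [a - c, 2 * b + c]


def S_after_T(v):
    return S(T(v))


M_T = matrix_of(T, 2)           # 3 x 2
M_S = matrix_of(S, 3)           # 2 x 3
M_ST = matrix_of(S_after_T, 2)  # 2 x 2, built only from the composed map

print(M_ST)                      # [[1, 3], [6, -1]]
print(M_ST == matmul(M_S, M_T))  # True
```

Nothing in `matrix_of` knows about multiplication rules; the row-times-column pattern appears on its own once maps are composed.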

Chapter 4: Polynomials

This chapter contains no linear algebra as such, but it proves some basic theorems about real and complex polynomials that are useful in the study of linear maps from a space to itself.

Chapter 5: Eigenvalues and Eigenvectors

Vector spaces, subspaces, span, linear independence, dimension and linear maps all serve as a preview to eigenvalues and eigenvectors, a fascinating topic of linear algebra. They arise in the context of invariant subspaces: a subspace U of V is invariant under an operator T if T maps every vector of U back into U. The book makes a simple but profound statement, with which any undergraduate, math PhD or math faculty member would agree:

A central goal of linear algebra is to show that, given an operator $T \in \mathcal{L}(V)$, there exists a basis of V with respect to which T has a reasonably simple matrix. More concretely, the goal is to find matrices of linear maps with as many 0’s as possible.

When one looks at the matrix of a linear map, everything in the world of matrices starts becoming clear. This chapter makes the connection between matrices and eigenvalues. Why should the matrix of a linear map have any connection with its eigenvalues? Why must all the diagonal entries of an upper-triangular matrix be nonzero for the map to be invertible? What happens when the matrix is diagonal? What can you say about the basis of the vector space if the matrix is diagonal? All these questions are carefully answered in this chapter.
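The invertibility criterion for upper-triangular matrices can be made concrete with a constructive sketch of my own (not the book's proof). If the first zero on the diagonal sits at position k, set $x_k = 1$ and $x_j = 0$ for $j > k$, then back-substitute for the earlier coordinates, whose diagonal entries are nonzero; this gives a nonzero vector in the null space. Applying the same idea to $U - \lambda I$, which is still upper triangular, shows why the eigenvalues of an upper-triangular matrix are exactly its diagonal entries. The function names and the example matrix are hypothetical.

```python
from fractions import Fraction


def kernel_vector_upper_triangular(U):
    """For an upper-triangular U, return a nonzero x with U x = 0, or None if none exists.

    If the first zero on the diagonal is at position k, set x_k = 1, x_j = 0 for j > k,
    and back-substitute for x_{k-1}, ..., x_0 (those diagonal entries are nonzero).
    If no diagonal entry is zero, back substitution forces x = 0, so U is injective
    (hence invertible) and None is returned.
    """
    n = len(U)
    k = next((i for i in range(n) if U[i][i] == 0), None)
    if k is None:
        return None
    x = [Fraction(0)] * n
    x[k] = Fraction(1)
    for i in range(k - 1, -1, -1):
        s = sum(Fraction(U[i][j]) * x[j] for j in range(i + 1, n))
        x[i] = -s / U[i][i]
    return x


def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]


U = [[2, 1, 0],
     [0, 3, 4],
     [0, 0, 2]]

# lam is an eigenvalue exactly when U - lam*I has a zero on its diagonal,
# i.e. when lam is one of the diagonal entries of U.
for lam in [2, 3, 5]:
    shifted = [[U[i][j] - (lam if i == j else 0) for j in range(3)] for i in range(3)]
    v = kernel_vector_upper_triangular(shifted)
    if v is None:
        print(lam, "is not an eigenvalue")
    else:
        print(lam, "eigenvector", [str(c) for c in v], matvec(U, v) == [lam * c for c in v])
```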

The highlight of this chapter is the proof that every operator on a finite-dimensional, nonzero complex vector space has an eigenvalue. The proof does not mention determinants or the characteristic equation. Instead it uses linear dependence: for a nonzero v in an n-dimensional space, the n + 1 vectors $v, Tv, \dots, T^n v$ cannot be linearly independent, and factoring the resulting polynomial over the complex numbers produces an eigenvalue. It is an absolute charm compared with other books, where intuition is lost after pages and pages of proof.
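The argument can be traced step by step on the 90° rotation of $\mathbf{R}^2$, which has no real eigenvalue but, viewed as an operator on $\mathbf{C}^2$, must have a complex one. The code is my own illustration of the proof's steps, with hypothetical helper names; the polynomial $z^2 + 1$ is read off by hand for this particular example rather than computed in general.

```python
def T(v):
    """The 90-degree rotation T(x, y) = (-y, x), viewed as an operator on C^2."""
    x, y = v
    return (-y, x)


def add(u, w):
    return tuple(a + b for a, b in zip(u, w))


def scale(c, u):
    return tuple(c * a for a in u)


n = 2          # dim C^2
v = (1, 0)     # any nonzero vector will do

# Step 1: the n + 1 vectors v, Tv, ..., T^n v cannot be linearly independent in C^n.
krylov = [v]
for _ in range(n):
    krylov.append(T(krylov[-1]))
print(krylov)  # [(1, 0), (0, 1), (-1, 0)]

# Step 2: here the dependence is v + T^2 v = 0, i.e. p(T)v = 0 for p(z) = z^2 + 1.
assert add(krylov[0], krylov[2]) == (0, 0)

# Step 3: over C, p(z) = (z - i)(z + i), so (T - iI)(T + iI)v = 0 and one of the
# factors T - iI, T + iI is not injective.
w = add(T(v), scale(1j, v))  # w = (T + iI)v
if w == (0, 0):
    print("T + iI kills v, so -i is an eigenvalue")
else:
    # w != 0 but (T - iI)w = 0, so w is an eigenvector with eigenvalue i
    assert add(T(w), scale(-1j, w)) == (0, 0)
    print("eigenvalue i, eigenvector", w)
```

Over $\mathbf{R}$ the argument stalls at step 3, because $z^2 + 1$ has no real factors; this is exactly why the theorem is stated for complex vector spaces.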

Chapter 6: Inner Product Spaces

The norm of a vector is another name for its length. The trouble with the norm is that it is not linear. To bring linearity into the discussion, the chapter introduces the inner product, a generalization of the dot product to multidimensional spaces.

An inner product on V is a function that takes each ordered pair (u, v) of elements of V to a number $\langle u, v \rangle$ and has the following properties: positivity, definiteness, additivity in the first slot, homogeneity in the first slot and conjugate symmetry. An inner product space is a vector space V together with an inner product on V. One can define an inner product in several ways, as long as the definition has these properties; the Euclidean inner product is the one usually encountered, but it is not the only one. The chapter then defines the norm in terms of the inner product, $\|v\| = \sqrt{\langle v, v \rangle}$, and proves inequalities such as the Cauchy–Schwarz inequality and the triangle inequality from the properties of inner products and norms.
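To see that the Euclidean dot product is only one choice, here is a sketch of a weighted inner product on $\mathbf{C}^3$, $\langle x, y \rangle = \sum_k w_k x_k \overline{y_k}$ with every $w_k > 0$, together with the norm derived from it. The weights are arbitrary positive numbers chosen for illustration. Random sampling spot-checks conjugate symmetry, Cauchy–Schwarz and the triangle inequality; a spot check is of course not a proof, which is what the chapter supplies.

```python
import math
import random


def make_weighted_inner(weights):
    """<x, y> = sum_k w_k * x_k * conj(y_k); an inner product on C^n when every w_k > 0."""
    assert all(w > 0 for w in weights)

    def inner(x, y):
        return sum(w * a * b.conjugate() for w, a, b in zip(weights, x, y))

    return inner


inner = make_weighted_inner([1.0, 2.0, 5.0])  # example weights, not the Euclidean ones


def norm(x):
    # the norm is defined from the inner product: ||x|| = sqrt(<x, x>)
    return math.sqrt(inner(x, x).real)


random.seed(0)
for _ in range(1000):
    x = [complex(random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(3)]
    y = [complex(random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(3)]
    # conjugate symmetry: <x, y> = conj(<y, x>)
    assert abs(inner(x, y) - inner(y, x).conjugate()) < 1e-12
    # Cauchy-Schwarz: |<x, y>| <= ||x|| ||y||
    assert abs(inner(x, y)) <= norm(x) * norm(y) + 1e-12
    # triangle inequality: ||x + y|| <= ||x|| + ||y||
    s = [a + b for a, b in zip(x, y)]
    assert norm(s) <= norm(x) + norm(y) + 1e-12
print("conjugate symmetry, Cauchy-Schwarz and the triangle inequality held on all samples")
```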

The Gram–Schmidt procedure is then introduced; it takes a list of linearly independent vectors and turns it into an orthonormal list with the same span. Whenever we hear the word orthonormal, we should keep in mind that it refers to a particular, previously chosen inner product. The orthogonal complement of a subspace U is defined using the inner product and is then used to define the orthogonal projection onto U. The orthogonal projection of a vector in V onto a subspace U is used in a multitude of applications. So all the concepts are interlinked: inner product, norm, orthonormal basis, Gram–Schmidt, orthogonal complement of U, orthogonal projection. The connection is shown through an example: approximating sin(x) by a polynomial. Personally, I found this example to be the BEST. It is simple yet extremely insightful, and it ties together all the ideas in the chapter.

How do you represent sin(x) in polynomial form? The first thing that comes to mind is the Taylor series expansion, which every undergraduate learns at some point. This chapter presents a beautiful alternative built on Gram–Schmidt. Since Gram–Schmidt generates orthonormal lists from linearly independent lists, if you can come up with a linearly independent list in the space you are interested in, you can easily generate an orthonormal list.

For example, if you want to approximate sin(x) using $(1, x, x^2, x^3, x^4, x^5)$, apply Gram–Schmidt to this list to produce an orthonormal basis $(e_1, e_2, e_3, e_4, e_5, e_6)$ of the polynomials of degree at most 5. You can then project sin(x) onto the space U spanned by this orthonormal list: $P_U(\sin) = \langle \sin, e_1 \rangle e_1 + \dots + \langle \sin, e_6 \rangle e_6$.

For sin(x), the approximation obtained by projection is much better across the interval than the calculus-based Taylor polynomial of the same degree. This example lets you see the connections among linear independence, orthonormal vectors, the inner product, projection and the representation of a function on a subspace via Gram–Schmidt. It is a memorable example that will make the reader remember these concepts forever.
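The example can be reproduced numerically with a rough sketch. My assumptions, not necessarily the book's exact setup: the inner product is $\langle f, g \rangle = \int_{-\pi}^{\pi} f(x) g(x)\,dx$, approximated by Simpson's rule on a fine grid, and each function is represented by its sampled values on that grid. The code runs (modified) Gram–Schmidt on $1, x, \dots, x^5$, projects sin onto their span, and compares the worst-case error on $[-\pi, \pi]$ with that of the degree-5 Taylor polynomial $x - x^3/3! + x^5/5!$.

```python
import math

N = 2000  # number of Simpson subintervals (must be even)
A, B = -math.pi, math.pi
h = (B - A) / N
xs = [A + i * h for i in range(N + 1)]
# Simpson weights: h/3 * (1, 4, 2, 4, ..., 2, 4, 1)
ws = [h / 3 * (1 if i in (0, N) else (4 if i % 2 else 2)) for i in range(N + 1)]


def inner(f, g):
    """Approximate <f, g> = integral of f(x) g(x) over [-pi, pi] by Simpson's rule."""
    return sum(w * a * b for w, a, b in zip(ws, f, g))


def gram_schmidt(vectors):
    """Modified Gram-Schmidt: turn a linearly independent list into an orthonormal list."""
    basis = []
    for v in vectors:
        u = list(v)
        for e in basis:
            c = inner(u, e)
            u = [a - c * b for a, b in zip(u, e)]
        norm = math.sqrt(inner(u, u))
        basis.append([a / norm for a in u])
    return basis


def project(f, basis):
    """Orthogonal projection of f onto span(basis): sum of <f, e_j> e_j."""
    p = [0.0] * len(f)
    for e in basis:
        c = inner(f, e)
        p = [a + c * b for a, b in zip(p, e)]
    return p


monomials = [[x ** k for x in xs] for k in range(6)]  # 1, x, ..., x^5
sin_vals = [math.sin(x) for x in xs]
proj = project(sin_vals, gram_schmidt(monomials))
taylor = [x - x ** 3 / 6 + x ** 5 / 120 for x in xs]

proj_err = max(abs(a - b) for a, b in zip(proj, sin_vals))
taylor_err = max(abs(a - b) for a, b in zip(taylor, sin_vals))
print(f"max |sin - projection| on [-pi, pi]: {proj_err:.4f}")
print(f"max |sin - Taylor|     on [-pi, pi]: {taylor_err:.4f}")
```

The Taylor polynomial is excellent near 0 but drifts by roughly 0.5 at the endpoints, while the projection spreads its small error over the whole interval, because it minimizes the distance to sin in the chosen inner product rather than matching derivatives at a single point.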

Chapter 7: Operators on Inner Product Spaces

Once the inner product is defined, various operators can be defined on the space. This chapter introduces self-adjoint operators, normal operators, positive operators, isometries and square roots of operators, and investigates the characteristics of each. One thing that strikes you, if you have not taken the linear-transformation approach before, is that all the analysis taught in terms of matrices comes alive when seen through the lens of operators. The chapter ends with a discussion of the singular value decomposition, whose applications can be found in tons of domains; information retrieval, for one, makes heavy use of it.

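The highlight of this chapter is the spectral theorem, in its complex and real versions. These theorems characterize exactly which operators have an orthonormal basis of eigenvectors: normal operators on complex inner product spaces, and self-adjoint operators on real inner product spaces. Understanding this connection between the theorems and the operators is the key to the rationale behind the existence of such orthonormal bases.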
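The complex spectral theorem can be checked by hand on the 90° rotation, which is normal without being self-adjoint. The sketch below, with helper names of my own, verifies normality ($TT^* = T^*T$ with $T^*$ the conjugate transpose) and then confirms that $(1, -i)/\sqrt{2}$ and $(1, i)/\sqrt{2}$, eigenvectors I computed separately for this example, form an orthonormal basis of $\mathbf{C}^2$. Over $\mathbf{R}$ no such basis exists, consistent with the real spectral theorem, since the rotation is not self-adjoint.

```python
import math


def conj_transpose(A):
    """Adjoint with respect to the standard inner product: the conjugate transpose."""
    return [[A[j][i].conjugate() for j in range(len(A))] for i in range(len(A[0]))]


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def close(A, B, tol=1e-12):
    return all(abs(a - b) < tol for ra, rb in zip(A, B) for a, b in zip(ra, rb))


def inner(x, y):
    # standard inner product on C^n
    return sum(a * b.conjugate() for a, b in zip(x, y))


# Rotation by 90 degrees: normal (T T* = T* T) but not self-adjoint (T* != T).
T = [[0, -1], [1, 0]]
Ts = conj_transpose(T)
print("normal:", close(matmul(T, Ts), matmul(Ts, T)), "self-adjoint:", close(T, Ts))

# Complex spectral theorem: C^2 has an orthonormal basis of eigenvectors of T.
s = 1 / math.sqrt(2)
e1, lam1 = [s, -1j * s], 1j   # T e1 = i e1
e2, lam2 = [s, 1j * s], -1j   # T e2 = -i e2
for e, lam in [(e1, lam1), (e2, lam2)]:
    Te = [sum(T[i][k] * e[k] for k in range(2)) for i in range(2)]
    assert all(abs(a - lam * b) < 1e-12 for a, b in zip(Te, e))

print("orthonormal:", abs(inner(e1, e1) - 1) < 1e-12, abs(inner(e2, e2) - 1) < 1e-12,
      abs(inner(e1, e2)) < 1e-12)
```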

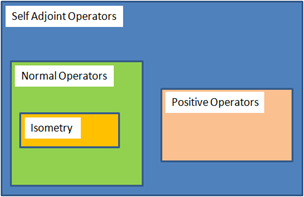

By explaining various properties of operators, one can get a visual picture of various operators. A normal operator is always a Self adjoint operator. An isometric operator is always a normal operator. A positive operator is by definition a Self-adjoint operator under some restrictions. Here is my naive representation of the operator structure :

What is the point of understanding these operators? Next time you see a matrix, you might pause to understand its structure rather than merely subjecting it to some algorithm for diagonalization or eigenvalue decomposition. By spending some time on the structure of the matrix, you gain better insight into the problem at hand.
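The relationships between these classes of operators can be spot-checked on small matrices. This is a sketch with helper names of my own. The positivity test uses a shortcut valid only for 2×2 matrices (a self-adjoint 2×2 matrix is positive exactly when its trace and determinant are both nonnegative), chosen for brevity; it is not the book's definition, which asks for $\langle Tv, v \rangle \ge 0$ for all v.

```python
def conj_transpose(A):
    """Adjoint with respect to the standard inner product: the conjugate transpose."""
    return [[A[j][i].conjugate() for j in range(len(A))] for i in range(len(A[0]))]


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def close(A, B, tol=1e-12):
    return all(abs(a - b) < tol for ra, rb in zip(A, B) for a, b in zip(ra, rb))


def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def is_self_adjoint(T):
    return close(T, conj_transpose(T))


def is_normal(T):
    Ts = conj_transpose(T)
    return close(matmul(T, Ts), matmul(Ts, T))


def is_isometry(T):
    # T*T = I, i.e. T preserves norms
    return close(matmul(conj_transpose(T), T), identity(len(T)))


def is_positive_2x2(T, tol=1e-12):
    # 2 x 2 shortcut only: a self-adjoint 2 x 2 matrix has real eigenvalues, and both are
    # >= 0 (equivalently <Tv, v> >= 0 for all v) exactly when trace >= 0 and det >= 0
    if not is_self_adjoint(T):
        return False
    tr = (T[0][0] + T[1][1]).real
    det = (T[0][0] * T[1][1] - T[0][1] * T[1][0]).real
    return tr >= -tol and det >= -tol


examples = {
    "symmetric [[2,1],[1,2]]": [[2, 1], [1, 2]],
    "rotation by 90 degrees": [[0, -1], [1, 0]],
    "reflection diag(1,-1)": [[1, 0], [0, -1]],
    "nilpotent [[0,1],[0,0]]": [[0, 1], [0, 0]],
}
for name, T in examples.items():
    print(f"{name:26} self-adjoint={is_self_adjoint(T)!s:5} normal={is_normal(T)!s:5} "
          f"isometry={is_isometry(T)!s:5} positive={is_positive_2x2(T)}")
```

The rotation is normal and an isometry but not self-adjoint, the reflection is self-adjoint and an isometry but not positive, and the nilpotent matrix falls outside all of the classes.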

Chapter 8: Operators on Complex Vector Spaces

The chapter starts off with generalized eigenvectors. The rationale for considering them is that not every operator has ENOUGH eigenvectors to give rise to ENOUGH invariant subspaces. Without enough eigenvectors, V cannot be written as a direct sum of eigenspaces.

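By using generalized eigenvectors, one can decompose the vector space as a direct sum of generalized eigenspaces. If $\lambda_1, \dots, \lambda_m$ are the distinct eigenvalues of T, then

$$V = \operatorname{null}(T - \lambda_1 I)^{\dim V} \oplus \dots \oplus \operatorname{null}(T - \lambda_m I)^{\dim V}.$$

The beauty of generalized eigenvectors is that this decomposition exists for every operator on a complex vector space. Moreover, for every basis of V with respect to which T has an upper-triangular matrix, each eigenvalue λ appears on the diagonal precisely $\dim \operatorname{null}(T - \lambda I)^{\dim V}$ times.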

The above statement connects the following concepts: generalized eigenspaces, dimension, nilpotent operators and the upper-triangular matrix of T. Thus the multiplicity of an eigenvalue has a clear geometric meaning: it is the dimension of the corresponding generalized eigenspace. This is a refreshing way to look at multiplicity, compared with counting repeated roots of a characteristic equation obtained via determinants. The minimal polynomial is then introduced, and the chapter connects it with the characteristic polynomial (the minimal polynomial divides the characteristic polynomial). Jordan bases are then introduced, showing that every operator on a complex vector space has a basis with respect to which its matrix is almost diagonal, an alternative to orthonormal eigenbases that is available even when no eigenbasis exists.
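A small example of my own shows why generalized eigenvectors are needed. The upper-triangular matrix below has eigenvalue 2 with only a one-dimensional eigenspace, yet 2 appears twice on its diagonal; the dimension of $\operatorname{null}(T - 2I)^{\dim V}$ recovers that count, and the generalized eigenspaces for 2 and 3 together fill out $\mathbf{F}^3$. The last lines check that $(z - 2)^2 (z - 3)$ annihilates T while $(z - 2)(z - 3)$ does not. Ranks are computed by exact elimination over the rationals; all helper names are hypothetical.

```python
from fractions import Fraction


def rank(A):
    """Rank by exact Gaussian elimination over the rationals."""
    rows = [[Fraction(x) for x in row] for row in A]
    r = 0
    for c in range(len(rows[0])):
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(len(rows)):
            if i != r and rows[i][c] != 0:
                f = rows[i][c] / rows[r][c]
                rows[i] = [a - f * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def matpow(A, p):
    n = len(A)
    R = [[1 if i == j else 0 for j in range(n)] for i in range(n)]
    for _ in range(p):
        R = matmul(R, A)
    return R


def shift(A, lam):
    # A - lam * I
    return [[A[i][j] - (lam if i == j else 0) for j in range(len(A))] for i in range(len(A))]


T = [[2, 1, 0],
     [0, 2, 0],
     [0, 0, 3]]
n = len(T)

for lam in (2, 3):
    eig_dim = n - rank(shift(T, lam))             # dim null(T - lam I)
    gen_dim = n - rank(matpow(shift(T, lam), n))  # dim null(T - lam I)^n
    diag_count = sum(1 for i in range(n) if T[i][i] == lam)
    print(f"lambda={lam}: eigenspace dim {eig_dim}, generalized dim {gen_dim}, "
          f"times on diagonal {diag_count}")

# (z - 2)^2 (z - 3) annihilates T; the smaller (z - 2)(z - 3) does not.
zero = [[0] * n for _ in range(n)]
p_min = matmul(matpow(shift(T, 2), 2), shift(T, 3))
p_small = matmul(shift(T, 2), shift(T, 3))
print(p_min == zero, p_small == zero)  # True False
```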

Chapter 9: Operators on Real Vector Spaces

In this chapter, the theorems proved for complex finite-dimensional vector spaces are extended to real finite-dimensional vector spaces. Ironically, the theorems for complex spaces are simpler, neater and easier to work with than their real counterparts; almost every theorem acquires some added complexity in the real case.

Chapter 10: Trace and Determinant

This chapter is the main reason the author develops the various theorems of the first nine chapters. The concepts of trace and determinant usually find their way into math courses without any intuition or geometric interpretation. By treating finite-dimensional vector spaces and their operators first, the author equips the reader with the kind of thinking needed to appreciate trace and determinant. Diagonalization is taught in every linear algebra course, but the reason for it is rarely given. After reading this chapter, you will develop a fresh perspective on the entire business of diagonalization.

The trace of an operator is introduced via its characteristic polynomial and then extended to the matrix of the operator. Similarly, the determinant of an operator is defined via the characteristic polynomial and then extended to the matrix of the operator. The highlight of this chapter is the computation of the determinant using finite-dimensional vector space concepts. The chapter ends by deriving the change of variables formula using operator theory.
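Defining trace and determinant through the characteristic polynomial makes them, over $\mathbf{C}$, the sum and the product of the eigenvalues counted with multiplicity, so they cannot depend on the basis. A quick sketch shows the familiar matrix formulas agreeing with that view: T below is upper triangular with eigenvalues 2, 3 and 5 on its diagonal, and $M = S T S^{-1}$ represents the same operator after a change of basis. The matrices are arbitrary examples of mine, and the determinant is computed with the permutation (Leibniz) formula, which is fine for small matrices.

```python
from itertools import permutations
from math import prod


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def trace(A):
    return sum(A[i][i] for i in range(len(A)))


def det(A):
    """Determinant via the permutation (Leibniz) formula."""
    n = len(A)
    total = 0
    for perm in permutations(range(n)):
        # sign of the permutation = (-1)^(number of inversions)
        inversions = sum(1 for i in range(n) for j in range(i + 1, n) if perm[i] > perm[j])
        total += (-1) ** inversions * prod(A[i][perm[i]] for i in range(n))
    return total


# T is upper triangular, so its eigenvalues (2, 3, 5) sit on the diagonal.
T = [[2, 7, -1],
     [0, 3, 4],
     [0, 0, 5]]
S = [[1, 1, 0], [0, 1, 1], [0, 0, 1]]
S_inv = [[1, -1, 1], [0, 1, -1], [0, 0, 1]]
assert matmul(S, S_inv) == [[1, 0, 0], [0, 1, 0], [0, 0, 1]]

M = matmul(matmul(S, T), S_inv)  # the same operator written in another basis

print(trace(M), 2 + 3 + 5)  # 10 10  (trace = sum of eigenvalues)
print(det(M), 2 * 3 * 5)    # 30 30  (determinant = product of eigenvalues)
```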

This is THE BEST linear algebra book that I have read to date. By providing the rationale behind matrices through linear operators, this book opens the reader’s eyes to “What lies behind every matrix?” and “What is going on behind the various decomposition algorithms?”

My motivation for reading this book was to understand inner product spaces, more specifically as preparation for working on Hilbert spaces, but the book gave me MUCH more than I had expected. This is one of the rare books that demonstrates, quite vividly, the principle: “Power of Math lies in Abstraction.”
